Notifications

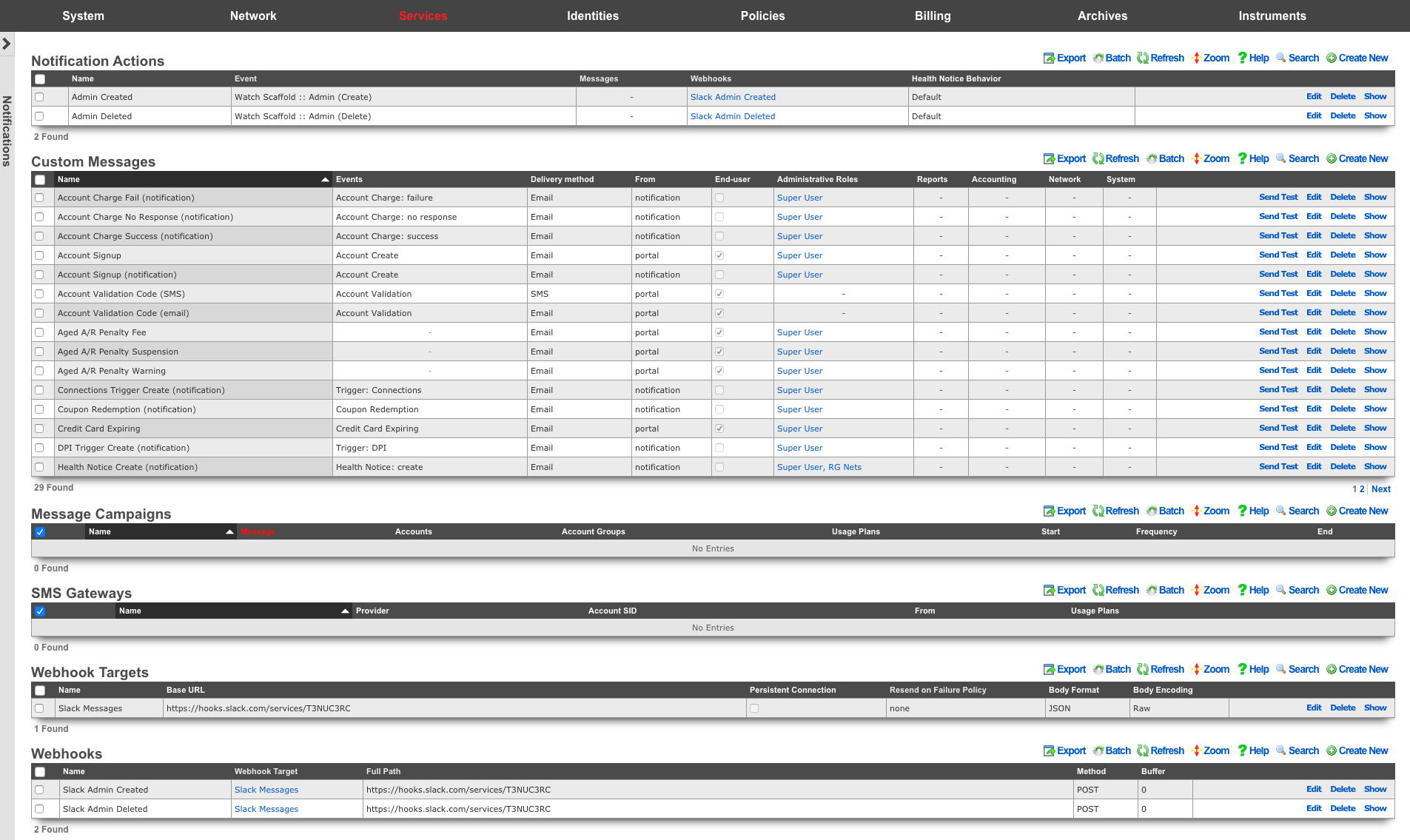

The notifications view presents the scaffolds associated with configuring templates for email, SMS, webhooks, and event health notices in response to events.

The notification actions scaffold enables clear communication to the RG end-user population, operators and administrators, as well as other systems.

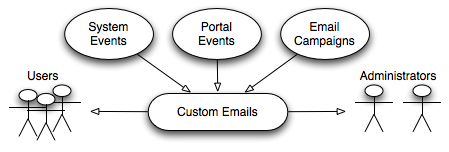

Email is one of several end-user communication methods used to build a complete revenue generating network strategy. Email is an important part of the feedback loop when end-users purchase services. Event-based email notifications give end-users confidence by providing a "receipt" when billing events occur. In addition, email can be used for marketing and administrative communication.

The rXg also uses email as a mechanism to communicate events to administrators. Events may be end-user related such as the service purchase case discussed before, or they may be system generated. Examples of system generated events include, but are not limited to, output from daily, weekly and monthly recurring system maintenance tasks, changes to uplink and monitored node status as well as detection of internal system problems.

The rXg sends emails to administrators and end-users when certain events occur (e.g., account creation, plan selection, etc.). These event-based email notifications draw from templates defined in the custom messages scaffold. The templates may be customized for content and look and feel, as well as translated to match the locale.

Custom message templates may also be used by administrator-initiated bulk email jobs. For example, the administrator may wish to send some or all end-users an email indicating a maintenance window, a reduced price service special or a paid advertisement from a partner.

The rXg email mechanism also helps operators generate revenue by enabling bulk email marketing. For example, if an operator becomes an affiliate marketing program subscriber, the operator will receive email notifications of special offers. In many cases, affiliate marketing program subscribers are given the opportunity to offer exclusive discount codes to their market base as a purchasing incentive. When an operator receives such a notification, a new email template incorporating affiliate program linkage and incentive information may be formulated and a job created to broadcast it to the end-user population. The operator is then issued a credit for end-users who take advantage of the opportunity described in the bulk email.

Significant operational cost reduction may also be achieved through the use of the rXg bulk email mechanism. Notification of maintenance windows, end-user surveys, promotions and well-checks may all be accomplished via the bulk email mechanism. Using the bulk email mechanism minimizes the hours utilized by support staff to communicate system and network administration events. In addition, keeping end-users informed often and ahead of time helps reduce support load in maintenance situations.

Below is an example Custom Message that reports to the recipients the amount of revenue generated in the previous 24 hour period:

<p>Good Morning,</p>

<%

rev = ArTransaction.where(['created_at > ?', 24.hours.ago]).sum(:credit)

%>

<p>Your network generated $

<b><tt><%= sprintf("%.2f", rev) %></tt></b>

yesterday. Have a nice day.

</p>

The Ruby script embedded inside the Custom Message above performs a calculation on the ArTransactions to generate a report for the recipients. Ruby scripts may also perform write operations on the database. For example, below is a Custom Message that is configured to be sent to a guest services representative on a daily basis.

<p>Good Morning,</p>

<%

todays_credential = sprintf("%x%d", rand(255), rand(9999))

scg = SharedCredentialGroup.find_by_name('Guests')

scg.credential = todays_credential

scg.save

%>

<p>Today's shared credential for guest access is:</p>

<p><b><tt><%= todays_credential %></tt></b></p>

<p>Thank you.</p>

The Ruby script embedded inside the Custom Message sets the credential of the designated Shared Credential Group to a randomly generated value and notifies the associated administrators.
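
Because a Custom Message body is an ERB template, its markup can be checked outside the rXg with Ruby's standard erb library before it is saved. The following is a minimal dry-run sketch of the revenue report shown earlier. The credits array is made-up sample data that stands in for the ArTransaction query, which is only available on the rXg itself.

```ruby
require "erb"

# Hypothetical sample data standing in for
# ArTransaction.where(['created_at > ?', 24.hours.ago]).sum(:credit)
credits = [19.99, 4.50, 120.00]

template = <<~'BODY'
  <p>Good Morning,</p>
  <% rev = credits.sum %>
  <p>Your network generated $<b><tt><%= sprintf("%.2f", rev) %></tt></b> yesterday. Have a nice day.</p>
BODY

# Render the template against the local variables defined above.
html = ERB.new(template).result(binding)
puts html
```

A dry run of this kind only catches syntax and formatting problems. Templates that write to the database, such as the shared credential example, should still be tested on a non-production rXg.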

Adding Graphs to Custom Messages

Network, System, and Accounting graphs may be added to your custom messages in order to provide enhanced reporting emails to operators and administrators. When Graph records are selected in the custom message's configuration, the system automatically generates PNG images of those graphs and attaches them to the email.

Additionally, graph images may be inserted into the body of an email by calling the insert_attached_graphs method inside the body. The method takes an optional argument specifying the resolution of the image, such as 1000px*600px (the default). The resulting images will be stored on the rXg in a publicly accessible directory such that they may be retrieved by email clients.

For example, below is a Custom Message that inserts all associated graphs into the body of the email, separated by a line-break (<br>).

<h2>Weekly Report</h2>

<h3><%= Date.today.strftime("%a, %B %d, %Y") %></h3>

<br>

<%= insert_attached_graphs('1200px*700px') %>

If you wish to exercise greater control over the structure of your HTML email, you may provide a block to the method. The block will yield an array of hashes in the format of { graph_object => image_tag_string }. The array may be iterated over to insert each image tag into your desired HTML structure. For example:

<h2>Weekly Report</h2>

<h3><%= Date.today.strftime("%a, %B %d, %Y") %></h3>

<br>

<table>

<% insert_attached_graphs('600px*500px') do |graph_tags| %>

<% graph_tags.each do |graph, image_tag| %>

<tr> <td align="center" style="font-size:34px"><%= graph.title %></td> </tr>

<tr> <td align="center" style="font-size:14px"><%= graph.subtitle %></td> </tr>

<tr> <td><%= image_tag %></td> </tr>

<% end %>

<% end %>

</table>

Adding WLAN QR Codes to Custom Messages

QR codes may be sent as an attachment with your custom messages in order to provide an end-user with easy access to the WLAN associated with their usage plan's policy. Below is a very basic template you may add to the body of a custom email to accomplish this:

<%

raise CustomEmail::DoNotSendError unless (account = Account.find_by(login: '%account.login%'))

wlan = account.usage_plan.policy.wlan

%>

<%= qr_code_image_tag_for_email(account, wlan) %>

The policy associated with the account's usage plan must be associated with a WLAN.
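
If any link in the account, usage plan, policy, WLAN chain is missing, the template above would fail with a NoMethodError instead of skipping the email. The sketch below shows one way to guard the chain with Ruby's safe navigation operator. The Struct classes and the DoNotSendError class are stand-ins defined here for illustration only; on the rXg the real models and CustomEmail::DoNotSendError would be used.

```ruby
# Stand-ins for the rXg models and error class, for illustration only.
DoNotSendError = Class.new(StandardError)
Policy    = Struct.new(:wlan)
UsagePlan = Struct.new(:policy)
Account   = Struct.new(:login, :usage_plan)

# Returns the WLAN for an account, or raises so that no email is sent.
def wlan_for(account)
  wlan = account&.usage_plan&.policy&.wlan
  raise DoNotSendError, "no WLAN linked to the usage plan's policy" if wlan.nil?
  wlan
end

with_wlan    = Account.new("alice", UsagePlan.new(Policy.new("Guest-WiFi")))
without_wlan = Account.new("bob", UsagePlan.new(Policy.new(nil)))

puts wlan_for(with_wlan)
begin
  wlan_for(without_wlan)
rescue DoNotSendError => e
  puts "skipped: #{e.message}"
end
```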

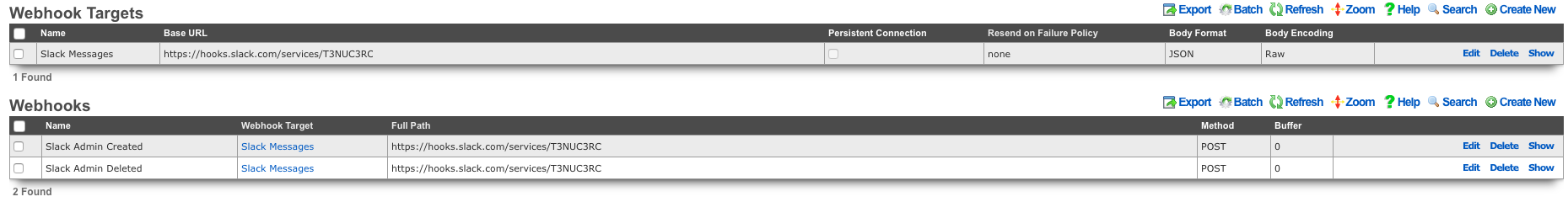

Webhooks and Webhook Targets

The webhooks and webhook targets scaffolds enable an operator to integrate other systems with the rXg. Webhooks are user-defined HTTP callbacks which are triggered by specific events. The events are configured in the notification actions scaffold. When the triggering event occurs, a webhook gathers the required data (headers and body) and sends it to the configured URL.

An entry in the webhook targets scaffold defines a set of 'global' parameters that all webhooks configured for the target will use. These include the base URL, body formatting, error response codes, as well as any global HTTP headers or query parameters an operator may need to configure. This makes it possible to configure multiple unique webhooks that share a common target, and eliminates the need to repeat common elements such as API keys or content types on each configured webhook.

An entry in the webhooks scaffold defines the parameters for a single webhook. A webhook must have an associated webhook target, which contains the base URL. The specific endpoint path is defined in the webhook scaffold entry. The path is appended to the base URL of the webhook's configured target. An operator can override the base URL by starting the webhook path with a /. The HTTP request method, as well as the body or data, are also configured within the webhook scaffold. Any additional HTTP headers or query parameters that are unique to the configured webhook can be added.
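
The relationship between a target and its webhooks can be pictured as two layers of settings that are combined when a request is built. The sketch below is illustrative only: the hash keys, the example URL and the rule that webhook-specific properties win over target-wide ones on a name clash are assumptions, and the leading / override is not modeled.

```ruby
require "uri"

# Hypothetical records mirroring the two scaffolds; field names are illustrative.
target = {
  base_url: "https://api.example.com/v1/",
  headers:  { "Content-Type" => "application/json", "X-Api-Key" => "secret" },
  query:    { "site" => "hotel-1" }
}
webhook = {
  method:  "POST",
  path:    "accounts",
  headers: { "X-Event" => "account-create" },
  query:   { "verbose" => "1" }
}

# Build a request description: the path is appended to the base URL, and
# target-wide properties are merged with the webhook-specific ones.
def build_request(target, webhook)
  uri = URI.parse(target[:base_url] + webhook[:path])
  uri.query = URI.encode_www_form(target[:query].merge(webhook[:query]))
  { method: webhook[:method], url: uri.to_s,
    headers: target[:headers].merge(webhook[:headers]) }
end

request = build_request(target, webhook)
puts request[:method], request[:url]
request[:headers].each { |name, value| puts "#{name}: #{value}" }
```

Keeping the API key and content type on the target means a second webhook only needs its own path, method and body.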

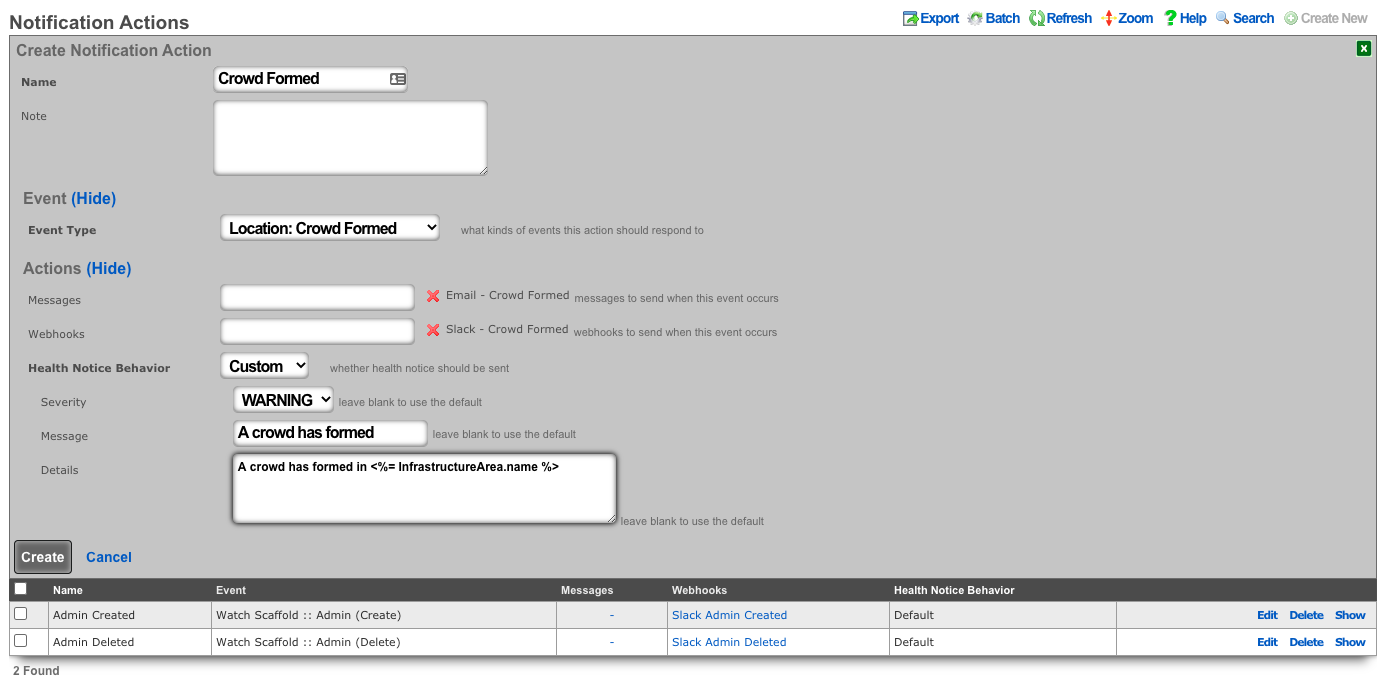

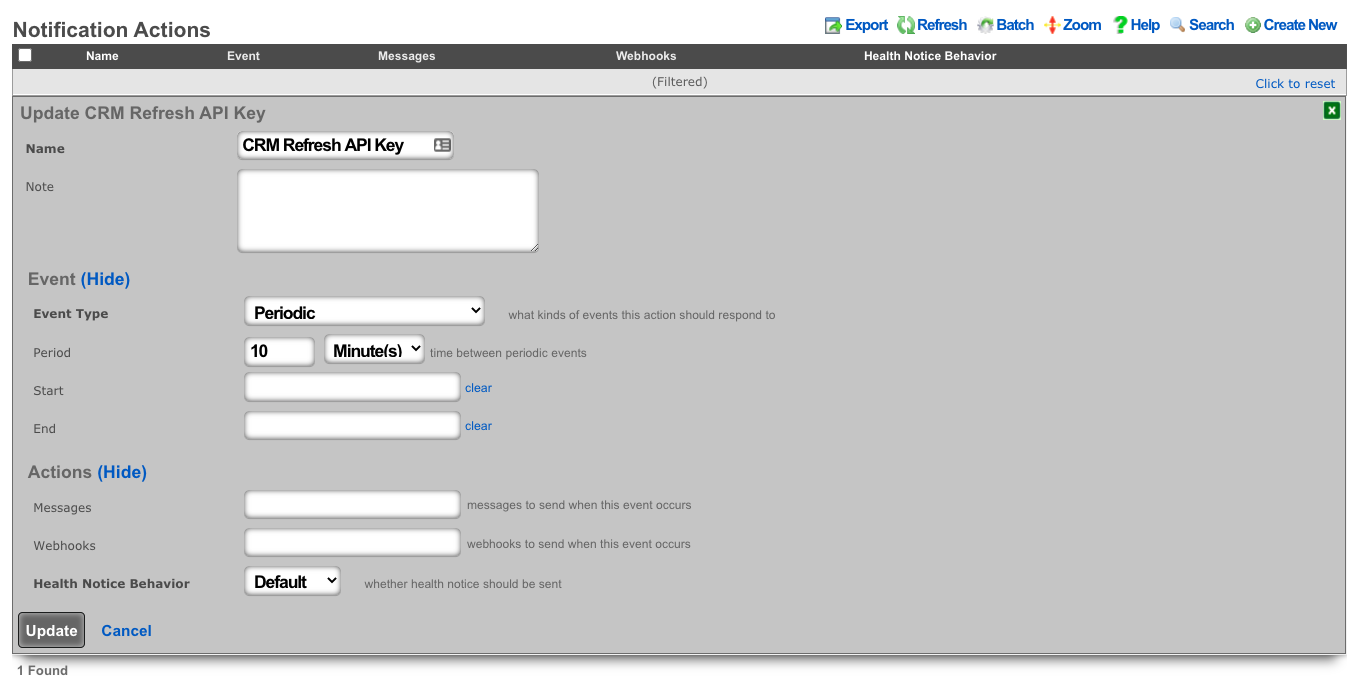

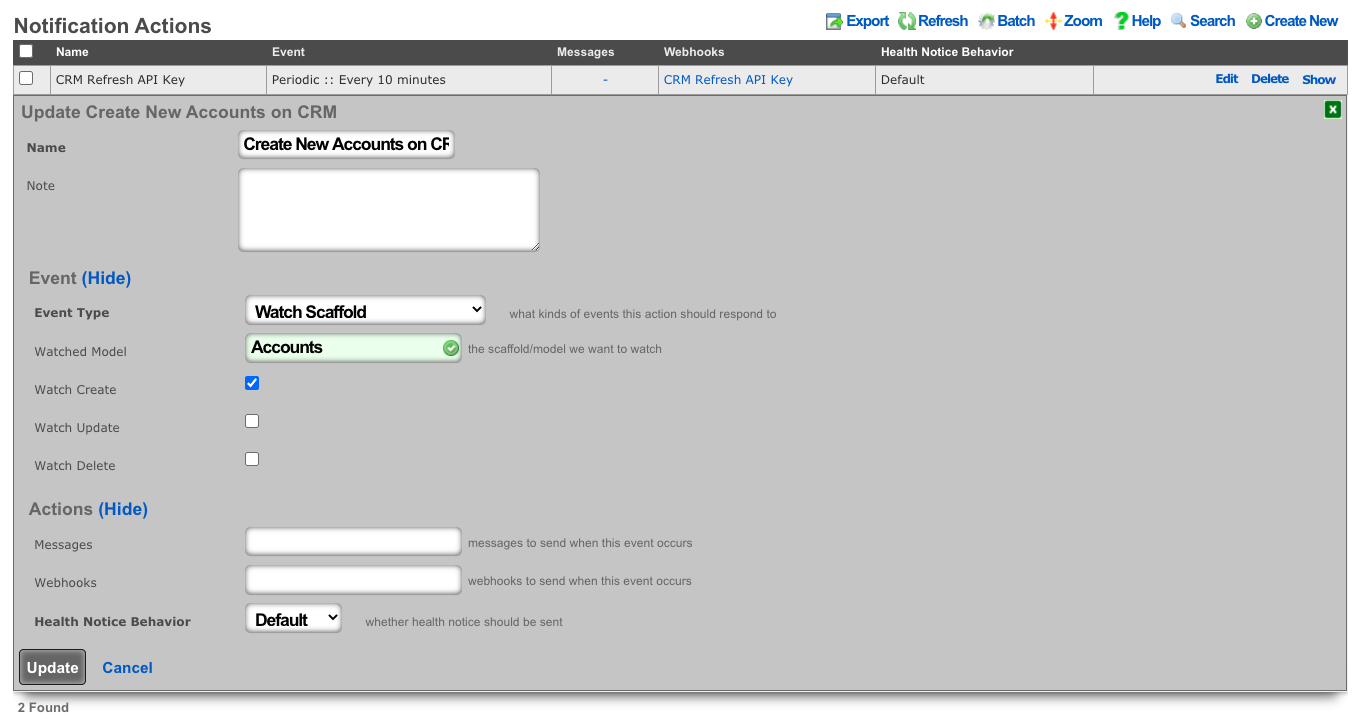

Notification Actions

An entry in the notification actions scaffold defines an event upon which the rXg should perform the selected action(s). Events can include database changes, time and/or location based triggers, and more.

The name field is an arbitrary string descriptor used only for administrative identification. Choose a name that reflects the purpose of the record. This field has no bearing on the configuration or settings determined by this scaffold.

The note field is a place for the administrator to enter a comment. This field is purely informational and has no bearing on the configuration settings.

The event type field selects what kind of events should trigger a configured action. Depending on the selection, additional options may be rendered for operator configuration. For database changes, an operator can select Watch Scaffold and then choose the appropriate modifiers (create, update, and/or delete).

The messages field configures a custom message to be sent when the criteria of the event are met. The message recipients are configured in the custom messages scaffold entry.

The webhooks field defines the configured webhooks to be triggered when the criteria of the event are met.

The health notice field defines whether the system should send a health notice. Choosing Default results in the default behavior of the configured event. For instance, "Certbot Failure" is configured to send a health notice on failure. If the event does not have a default health notice action, nothing is changed, and no health notice is sent. Choosing Disabled overrides the default health notice configured for the event type and prevents it from being sent. For instance, with "Certbot Failure", choosing Disabled prevents the health notice from being sent. Choosing Custom allows the operator to configure a new health notice or override an existing one. For instance, "Watch Scaffold :: Administrators" does not trigger a health notice by default. Choosing Custom allows an operator to configure a health notice for this event type.
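
The three health notice choices can be summarized as a small decision function. This is a sketch of the behavior described above, not the rXg implementation; the method name, symbols and string values are hypothetical.

```ruby
# Resolve which health notice (if any) an event should produce.
# setting:       :default, :disabled, or :custom (the health notice field)
# event_default: the notice built into the event type, or nil if it has none
# custom_notice: the operator-defined notice, used only with :custom
def resolve_health_notice(setting, event_default, custom_notice = nil)
  case setting
  when :default  then event_default
  when :disabled then nil
  when :custom   then custom_notice
  else raise ArgumentError, "unknown setting: #{setting.inspect}"
  end
end

# "Certbot Failure" ships with a default notice; a Watch Scaffold event does not.
puts resolve_health_notice(:default, "Certbot Failure").inspect
puts resolve_health_notice(:disabled, "Certbot Failure").inspect
puts resolve_health_notice(:default, nil).inspect
puts resolve_health_notice(:custom, nil, "Administrator record changed").inspect
```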

Event Type Reference

The event type field determines what triggers a notification action. Each event type category serves a distinct purpose:

Special Events

| Event Type | Description |

|---|---|

| Watch Scaffold | Triggers when database records are created, updated, or deleted. Select the model to watch and which operations (create/update/delete) should fire the action. Useful for auditing changes, syncing data to external systems, or triggering workflows on record changes. |

| Periodic | Triggers on a configurable schedule. Specify the interval (minutes, hours, days, weeks, or months) and optionally set start/end times to constrain when the action runs. Useful for scheduled reports, recurring maintenance tasks, or timed integrations. |

| Manual | Triggers only when explicitly invoked via the API or the mobile Action Button app. Useful for on-demand operations like toggling configurations, running ad-hoc scripts, or operator-initiated workflows. |

General Error Conditions

| Event Type | Description |

|---|---|

| Backend Daemon Delayed | Triggers when a backend daemon (background processing service) is running slower than expected. Indicates potential performance issues or resource constraints affecting background jobs. |

| Backend Daemon Not Responding | Triggers when a backend daemon fails to respond entirely. Indicates a critical failure requiring immediate attention to restore background processing. |

| BGP Peer Offline | Triggers when a configured BGP (Border Gateway Protocol) peer becomes unreachable. Indicates routing issues that may affect network connectivity. |

| Buffered PMS Charge Failure | Triggers when a Property Management System charge that was queued for later processing fails. Indicates billing integration issues with hospitality PMS systems. |

| Buffered Webhook Failure | Triggers when a webhook that was buffered for later delivery fails after all retry attempts. Indicates connectivity or endpoint issues with external integrations. |

| Certbot Failure | Triggers when automatic SSL/TLS certificate renewal via Certbot fails. Requires attention to prevent certificate expiration. |

| Config Template Download Failure | Triggers when the system fails to download a configuration template from a remote source. May indicate network issues or invalid template URLs. |

| Custom Health Notice Failure | Triggers when a custom health notice (configured in a notification action) fails to render due to ERB template errors. Check the template syntax in the notification action. |

| Disk Image Download Failure | Triggers when downloading a disk image (for virtualization or fleet deployment) fails. May indicate network issues or storage problems. |

| Event Response Failure | Triggers when a notification action's response (webhook, script, or message) fails during execution. The related notification action is linked to the event for debugging. |

| IPsec Tunnel Offline | Triggers when an IPsec VPN tunnel goes down. Indicates VPN connectivity issues affecting secure site-to-site communications. |

| (Domain/Host) Name Not Resolving | Triggers when DNS resolution fails for a monitored hostname. Indicates DNS configuration issues or upstream resolver problems. |

| PF Error | Triggers when the packet filter (firewall) encounters an error. May indicate rule syntax issues or resource exhaustion affecting traffic filtering. |

| Portal Asset Precompile Failure | Triggers when captive portal assets (CSS, JavaScript) fail to precompile. May affect portal appearance or functionality. |

| Portal Controller Error | Triggers when the captive portal application encounters an unhandled error. Check portal logs for details. |

| Portal Sync Failure | Triggers when synchronizing portal configuration or assets to cluster nodes or fleet devices fails. |

| Protobuf Schema Error | Triggers when there's an error with Protocol Buffer schema parsing or encoding. Affects integrations using Protobuf body format. |

| License Expiration Issue | Triggers when the system license is approaching expiration or has expired. Contact support to renew the license. |

| Infrastructure Import Error | Triggers when importing infrastructure configuration (from backup or migration) fails. Check import file format and compatibility. |

| Version is not compatible | Triggers when a version mismatch is detected, such as between cluster nodes or with external integrations. |

| Configuration out of sync | Triggers when configuration drift is detected between cluster nodes or between the database and running state. |

Cluster Error Conditions

| Event Type | Description |

|---|---|

| Cluster Node Status | Triggers when a cluster node's activity status changes (e.g., becomes unreachable or comes back online). |

| Cluster Node Replication | Triggers when database replication between cluster nodes encounters issues or falls behind. |

| Cluster Node HA | Triggers when high-availability failover events occur or when HA state changes between cluster nodes. |

| CIN Interface Warning | Triggers when the Cluster Interconnect Network interface experiences issues such as speed degradation or errors. |

General Threshold Events

These events trigger when monitored system metrics cross configured thresholds. Configure thresholds in System :: Options :: Notification Thresholds.

| Event Type | Description |

|---|---|

| Accounts Limit (%) | Triggers when the number of accounts approaches the licensed limit. Default: Warning at 85%, Critical at 95%. |

| BiNAT Pool Utilization (%) | Triggers when Bi-directional NAT IP pool usage is high. Indicates potential IP address exhaustion for NAT translations. Default: Warning at 85%, Critical at 95%. |

| CIN Speed | Triggers when Cluster Interconnect Network speed drops below expected levels. Low values indicate potential network hardware issues. Default: Critical below 1000 Mb/s. |

| CPU Core Temperature (C) | Triggers when CPU temperature exceeds safe operating levels. High temperatures may indicate cooling issues. Default: Warning at 70C, Critical at 75C. |

| DHCP Pool Utilization (%) | Triggers when DHCP address pools (larger than /28) are running low on available addresses. Default: Warning at 85%, Critical at 95%. |

| DHCP Shared Network Utilization (%) | Triggers when DHCP shared network pools (larger than /29) are running low. Default: Warning at 85%, Critical at 95%. |

| Filesystem Utilization (%) | Triggers when disk space usage is high. Critical levels may cause system instability. Default: Warning at 75%, Critical at 80%. |

| Fleet Nodes Limit (%) | Triggers when the number of fleet nodes approaches the licensed limit. Default: Warning at 85%, Critical at 95%. |

| Filesystem Utilization Squid | Triggers when the Squid proxy cache filesystem is running low on space. Default: Critical at 80%. |

| Load Average | Triggers when system load (CPU demand) is consistently high. High values indicate the system is under heavy processing load. Default: Warning at 1.5, Critical at 2.0. |

| Local IPs Limit (%) | Triggers when local IP address allocation approaches the licensed limit. Default: Warning at 85%, Critical at 95%. |

| Login Sessions Limit (%) | Triggers when concurrent login sessions approach the licensed limit. Default: Warning at 85%, Critical at 95%. |

| Memory Utilization (%) | Triggers when RAM usage is high. High memory usage may cause performance degradation or out-of-memory conditions. Default: Warning at 90%, Critical at 95%. |

| Missing Backup Servers | Triggers when no backup servers are configured or reachable. Ensures backup infrastructure is available. |

| Connection States Limit (%) | Triggers when the firewall connection state table approaches capacity. High utilization may cause new connections to be dropped. Default: Warning at 85%, Critical at 95%. |

| VLAN Utilization (%) | Triggers when VLAN address space utilization is high. Default: Warning at 85%, Critical at 95%. |
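
The default values in the table above can be read as a two-level classification of each metric. The sketch below illustrates that reading with plain Ruby. Whether a reading exactly equal to a threshold counts as crossing it is an assumption here, and the method name is hypothetical.

```ruby
# Classify a metric reading against warning/critical thresholds.
# direction :above means higher is worse (utilization, temperature);
# direction :below means lower is worse (CIN speed).
def severity(value, warning: nil, critical: nil, direction: :above)
  crossed = lambda do |limit|
    next false if limit.nil?
    direction == :above ? value >= limit : value < limit
  end
  return :critical if crossed.call(critical)
  return :warning  if crossed.call(warning)
  :ok
end

puts severity(78, warning: 75, critical: 80)           # filesystem utilization (%)
puts severity(96, warning: 90, critical: 95)           # memory utilization (%)
puts severity(100, critical: 1000, direction: :below)  # CIN speed (Mb/s)
```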

Other Threshold Events

| Event Type | Description |

|---|---|

| Location: Crowd Formed | Triggers when the number of devices in a location area exceeds the configured crowd threshold. Useful for occupancy monitoring and crowd management. Configure thresholds in Network :: Location. |

| Location: Crowd Dispersed | Triggers when a previously formed crowd disperses (device count drops below threshold). |

| Filesystem Utilization Threshold | General filesystem monitoring event for custom threshold configurations. |

| Speed Test Failed | Triggers when an automated speed test fails to complete or returns unexpected results. |

Scheduled Upgrades

| Event Type | Description |

|---|---|

| Upgrade Initiated | Triggers when a scheduled system upgrade begins. Useful for notifying operators that maintenance has started. |

| Upgrade Completed | Triggers when a scheduled system upgrade completes successfully. Confirms the system is back online with the new version. |

| Upgrade Failed | Triggers when a scheduled system upgrade fails. Requires immediate attention to assess system state and potentially rollback. |

Fleet Nodes

| Event Type | Description |

|---|---|

| Fleet Node Offline | Triggers when a managed fleet node becomes unreachable. May indicate network issues, power loss, or device failure at the remote site. |

| Fleet Node Online | Triggers when a fleet node that was previously offline comes back online. Confirms connectivity restoration. |

DPU Devices

| Event Type | Description |

|---|---|

| DPU Device Offline | Triggers when a Data Processing Unit device becomes unreachable. Indicates connectivity or device issues. |

| DPU Device SSH Credentials Invalid | Triggers when SSH authentication to a DPU device fails. Indicates credential mismatch requiring reconfiguration. |

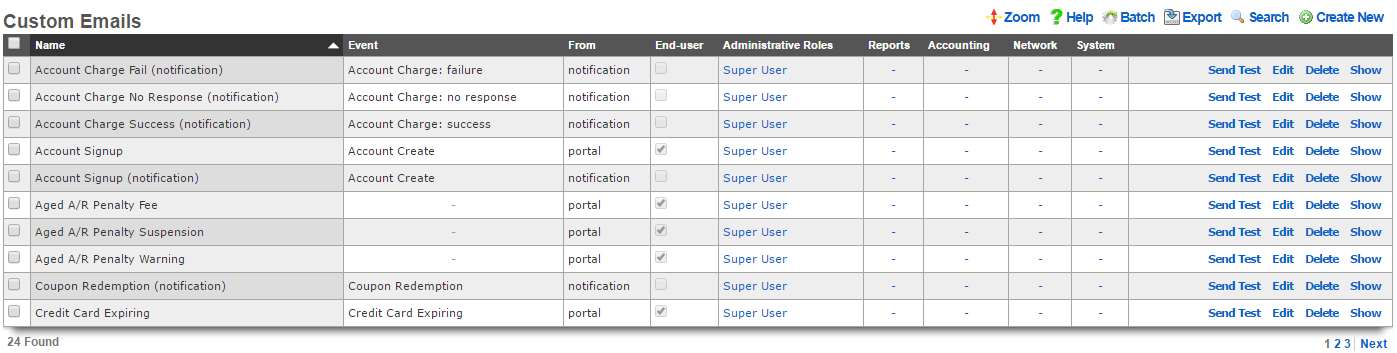

Custom Messages

An entry in the custom messages scaffold creates a template that can be used for email notifications and bulk emails originating from the rXg.

The name field is an arbitrary string descriptor used only for administrative identification. Choose a name that reflects the purpose of the record. This field has no bearing on the configuration or settings determined by this scaffold.

The event drop down associates this template with an event which will trigger an email notification to an end-user. If no event is chosen, the template is assumed to be used only in an email campaign.

The from field defines the address from which emails sent using this template will originate. This email address should lead to an account that is regularly monitored because end-users who receive emails based on this template are likely to reply with questions.

The account and admin roles check boxes enable automatic sending of emails using this custom message for event notification. If the account check box is checked, then the custom message template will be populated with the appropriate data and transmitted to the end-user when the selected event occurs. Similarly, when one or more admin roles check boxes are checked, the custom message template will be populated with the appropriate data and sent to the administrators who are linked to the checked admin roles.

The subject and body fields define the payload of the email template. Place the desired content for the subject and message into the appropriate field. Placeholders for variable substitutions begin and end with the percent sign and must refer to fields from the usage plans or accounts scaffolds. For example, %account.first_name% %account.last_name% will be substituted for the full name of the end-user being targeted by the email. See the full manual page for more information about the values available for substitution.
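
To preview how a subject or body will read after substitution, the percent-sign placeholders can be emulated with a few lines of Ruby. This is a rough sketch using made-up sample values; the substitute method is hypothetical and the rXg's own substitution logic may differ, for example in how it treats unknown placeholders.

```ruby
# Replace %object.attribute% placeholders using a nested hash of sample values.
# Unknown placeholders are left untouched so they are easy to spot.
def substitute(text, objects)
  text.gsub(/%(\w+)\.(\w+)%/) do |placeholder|
    object, attribute = $1, $2
    objects.dig(object, attribute)&.to_s || placeholder
  end
end

sample = {
  "account"    => { "first_name" => "Ada", "last_name" => "Lovelace" },
  "usage_plan" => { "name" => "Premium" }
}
subject = "Welcome %account.first_name% %account.last_name%"
body    = "Your %usage_plan.name% plan is active. Ref: %coupon.code%"

puts substitute(subject, sample)
puts substitute(body, sample)
```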

The note field is a place for the administrator to enter a comment. This field is purely informational and has no bearing on the configuration settings.

Email Campaigns

An entry in the email campaigns scaffold defines a bulk email job. Once the job is complete (i.e., all emails are queued for transmission), the entry in email campaigns is automatically deleted.

The name field is an arbitrary string descriptor used only for administrative identification. Choose a name that reflects the purpose of the record. This field has no bearing on the configuration or settings determined by this scaffold.

The custom message field selects the template that will be used for this bulk email job. The template payload is defined in the custom messages scaffold.

The account groups field configures the set of end-users who will be sent the email template selected by the custom message via this job. The members of the groups are controlled by the account groups scaffold on the accounts view of the identities subsystem.

The start at field defines the starting time and date for this job. If the value of the start at field is before the current date and time, the job will be started immediately. If the start at is set to the future, the job will start at the specified future date and time.

A job is automatically deleted after it is finished. The optional frequency field determines if this job is run more than once, and how often. The optional end at field defines when a repeat job is finished. A job with a set frequency and a blank end at field will repeat indefinitely at the configured frequency. A periodic job with a configured end at field is deleted when the time of the next iteration exceeds the end at date/time.
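
The interaction between start at, frequency and end at can be sketched as a function that returns the next run time, or nil once the job would be deleted. The method name and the use of seconds for the frequency are assumptions made for illustration; the scaffold's actual units are chosen in the form.

```ruby
# Decide when a campaign should run next, or nil if it should be deleted.
# frequency is in seconds here (nil for a one-time job); end_at may be nil.
def next_run(start_at:, now:, last_run: nil, frequency: nil, end_at: nil)
  if last_run.nil?
    # A start time in the past means the job starts immediately.
    return start_at > now ? start_at : now
  end
  return nil if frequency.nil?                 # one-time job has already run
  candidate = last_run + frequency
  return nil if end_at && candidate > end_at   # next iteration exceeds end at
  candidate
end

day = 24 * 60 * 60
now = Time.utc(2024, 1, 10, 12, 0, 0)

# One-time job whose start at is already in the past: runs immediately.
first = next_run(start_at: Time.utc(2024, 1, 1), now: now)
# Daily job that last ran now: the next iteration is one day later.
second = next_run(start_at: now, now: now, last_run: now, frequency: day)
# Same job with an end at before the next iteration: the job is deleted.
third = next_run(start_at: now, now: now, last_run: now, frequency: day,
                 end_at: now + day / 2)

puts first, second, third.inspect
```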

The note field is a place for the administrator to enter a comment. This field is purely informational and has no bearing on the configuration settings.

SMS Gateways

The SMS Gateways scaffold enables the creation of an SMS Gateway service which will be utilized for verifying a user's mobile number for the purpose of new account signups and/or password resets.

The name field identifies this SMS Gateway in the system.

The provider field specifies which provider this SMS Gateway relates to. Select a supported provider from the list.

The from field specifies the phone number, shortcode, or Alphanumeric Sender ID (not supported in all countries) to be used when sending SMS messages through this gateway. This value must correspond to a number purchased from or ported to the provider.

The Account SID and Auth Token fields specify the API credentials used to access the provider's REST API, and may be obtained from your dashboard within the provider's website.

The Usage Plans selections determine which usage plans are configured to utilize this SMS Gateway. The usage plan must also specify a validation method which includes SMS in order for messages to be sent via SMS.

The Splash Portals selections determine which splash portals are configured to utilize this SMS Gateway for the purposes of performing password resets. Accounts with a valid mobile phone number may request a password reset token via SMS or Email, depending on the password reset method specified in the splash portal.

The note field is a place for the administrator to enter a comment. This field is purely informational and has no bearing on the configuration settings.

For more information, see the SMS Integration manual entry.

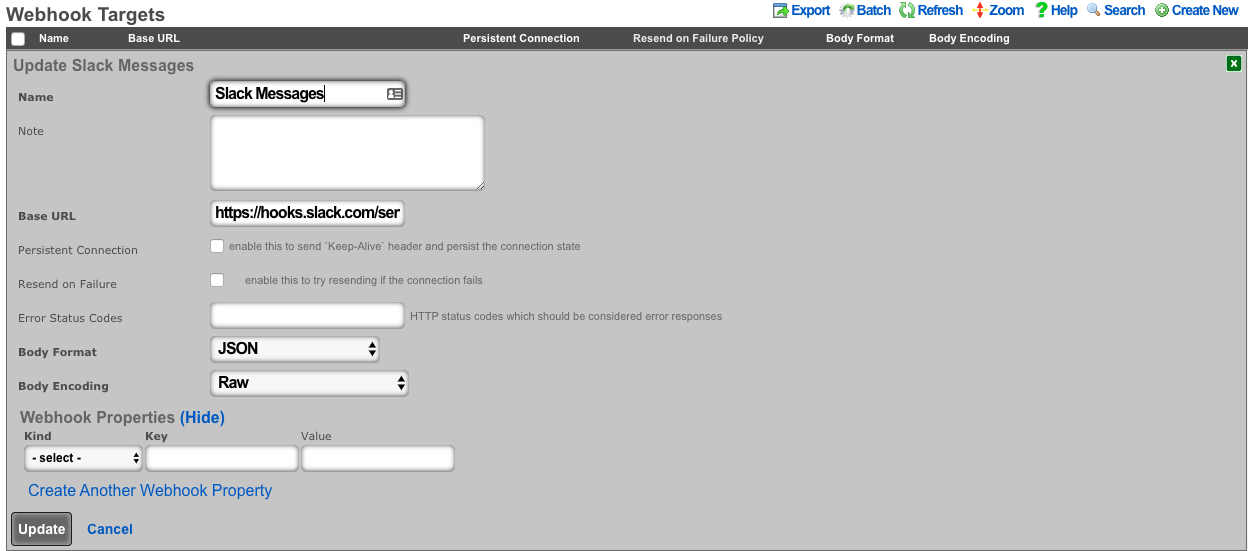

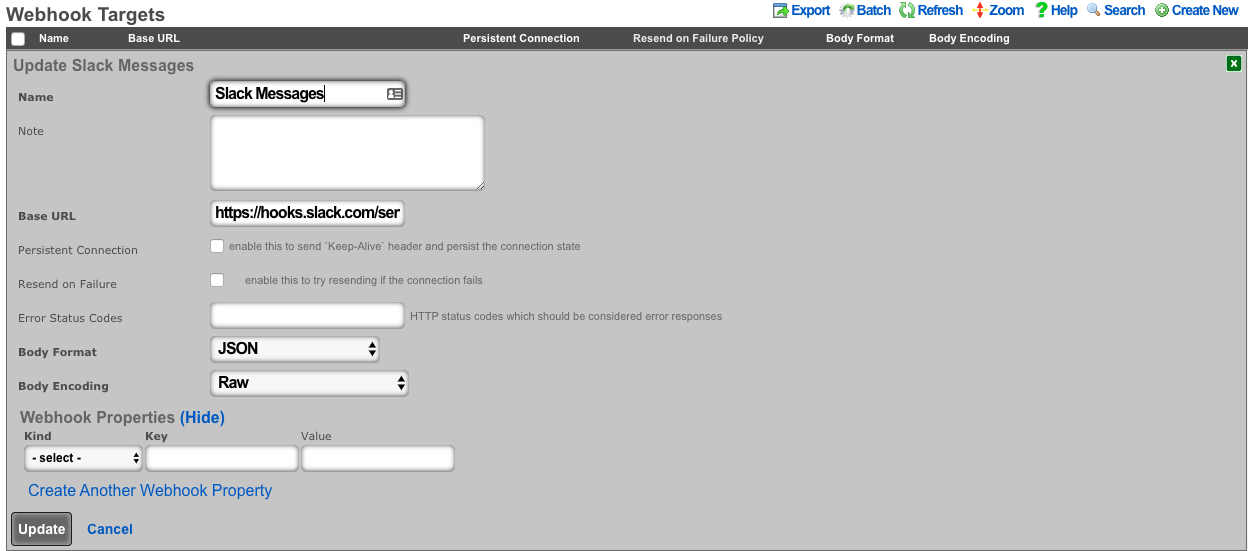

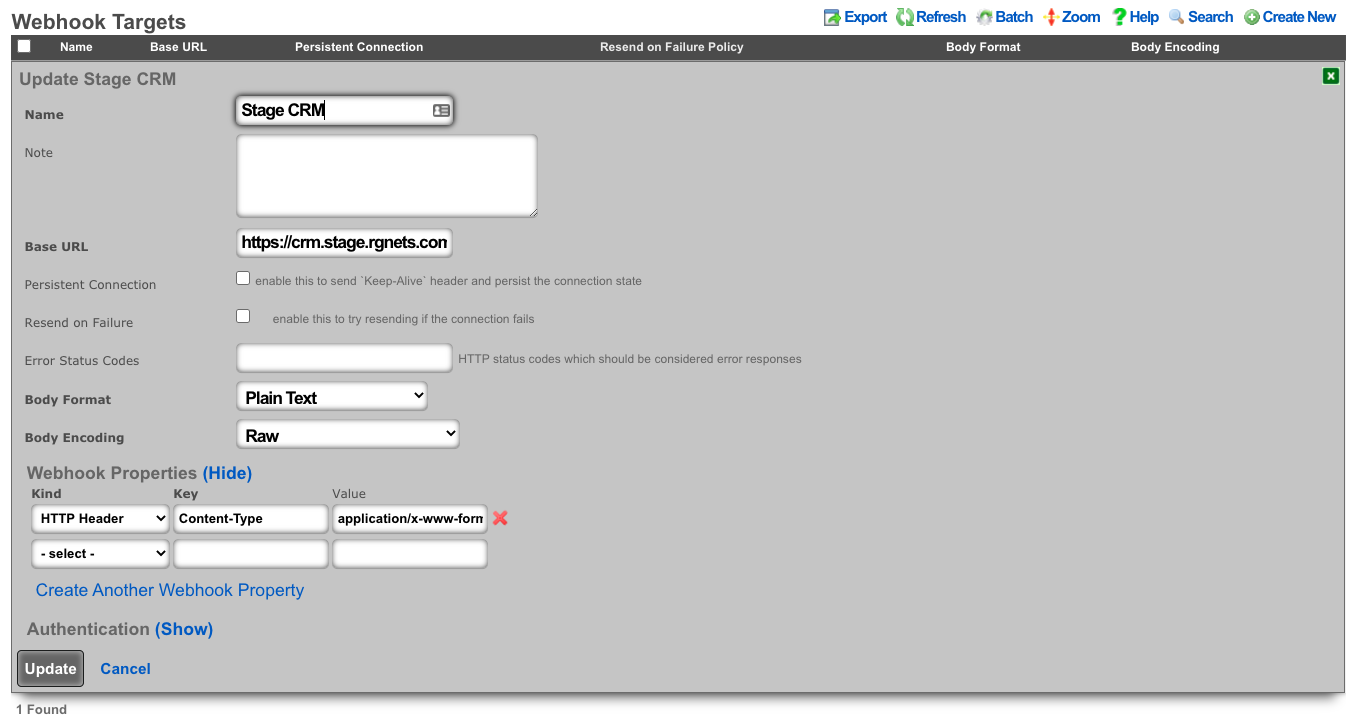

Webhook Targets

An entry in the webhook targets scaffold defines a remote endpoint the rXg should send configured webhooks to.

The name field is an arbitrary string descriptor used only for administrative identification. Choose a name that reflects the purpose of the record. This field has no bearing on the configuration or settings determined by this scaffold.

The note field is a place for the administrator to enter a comment. This field is purely informational and has no bearing on the configuration settings.

The base url field should be configured with the top-level/base URL of the remote API endpoint. This allows for flexibility to have multiple webhooks configured to use the same target.

The persistent connection checkbox configures the rXg to send 'keep-alive' headers and persist the connection state.

The resend on failure checkbox configures the rXg to attempt resending if the initial connection fails.

The error status codes field defines a list of codes that should be considered error responses. The use of wildcard 'x' is available to the operator. Examples include: (400, 401, 4xx, 500, 5xx, 50x).
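
The wildcard notation can be thought of as a pattern in which each x stands for any single digit. The sketch below shows one way to evaluate such a list against a response code. The method names are hypothetical and the rXg may parse the field differently.

```ruby
# Turn a pattern such as "4xx" or "50x" into an anchored regular expression
# in which each "x" matches any single digit.
def pattern_to_regexp(pattern)
  body = pattern.strip.downcase.chars.map { |c| c == "x" ? "\\d" : Regexp.escape(c) }.join
  Regexp.new("\\A#{body}\\z")
end

# True when the status code matches at least one configured pattern.
def error_status?(status, patterns)
  patterns.any? { |pattern| pattern_to_regexp(pattern).match?(status.to_s) }
end

patterns = "401, 4xx, 50x".split(",")
puts error_status?(404, patterns)  # matches 4xx
puts error_status?(503, patterns)  # matches 50x
puts error_status?(512, patterns)  # matches nothing in this list
puts error_status?(200, patterns)
```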

The body format selector defines the formatting of the body of webhooks configured to use this target. Selecting JSON automatically adds application/json to the HTTP Header content-type. Selecting Protobuf requires the operator to define a protobuf schema.

The body encoding selector defines an optional encoding scheme. Options include RAW (no encoding), Base64, and Base64 w/newline every 60 characters.
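
The three encoding options can be reproduced with Ruby's core Array#pack, which is handy when writing a receiver that has to decode the body. The sketch assumes the wrapped variant is equivalent to pack("m"), which inserts a newline after every 60 encoded characters; that matches the option's description but is not confirmed to be how the rXg produces it. The payload is made-up sample data.

```ruby
# Sample body; long enough that the encoded form spans several lines.
payload = '{"event":"account-create","login":"alice","plan":"Premium","site":"hotel-1"}'

raw          = payload               # RAW: body sent unchanged
base64       = [payload].pack("m0")  # Base64 on a single line
base64_lines = [payload].pack("m")   # Base64 with a newline every 60 characters

puts raw
puts base64
puts base64_lines

# A receiver can decode either Base64 form back to the original body.
decoded = base64_lines.unpack1("m")
puts decoded == payload
```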

The webhook properties fields allow the operator to define additional HTTP Headers or Query Parameters to send globally with all webhooks configured to use this target.

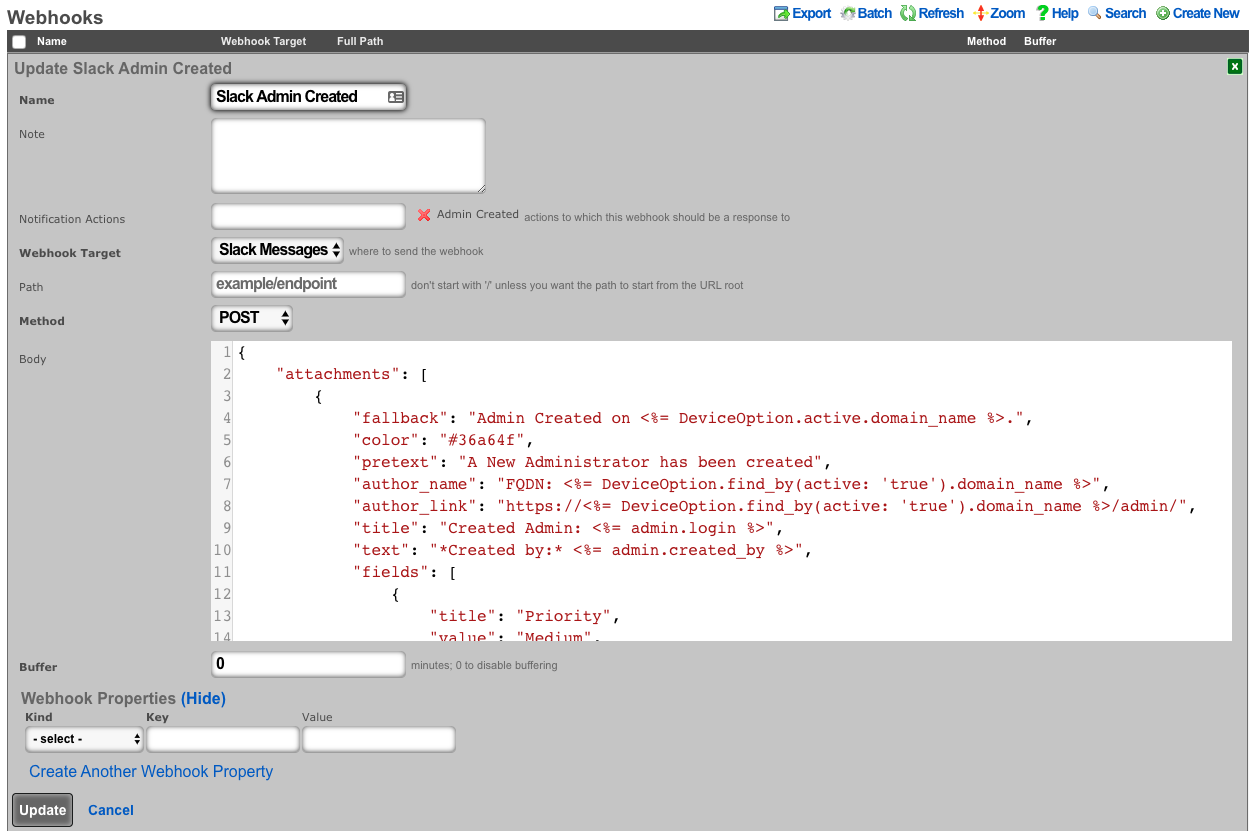

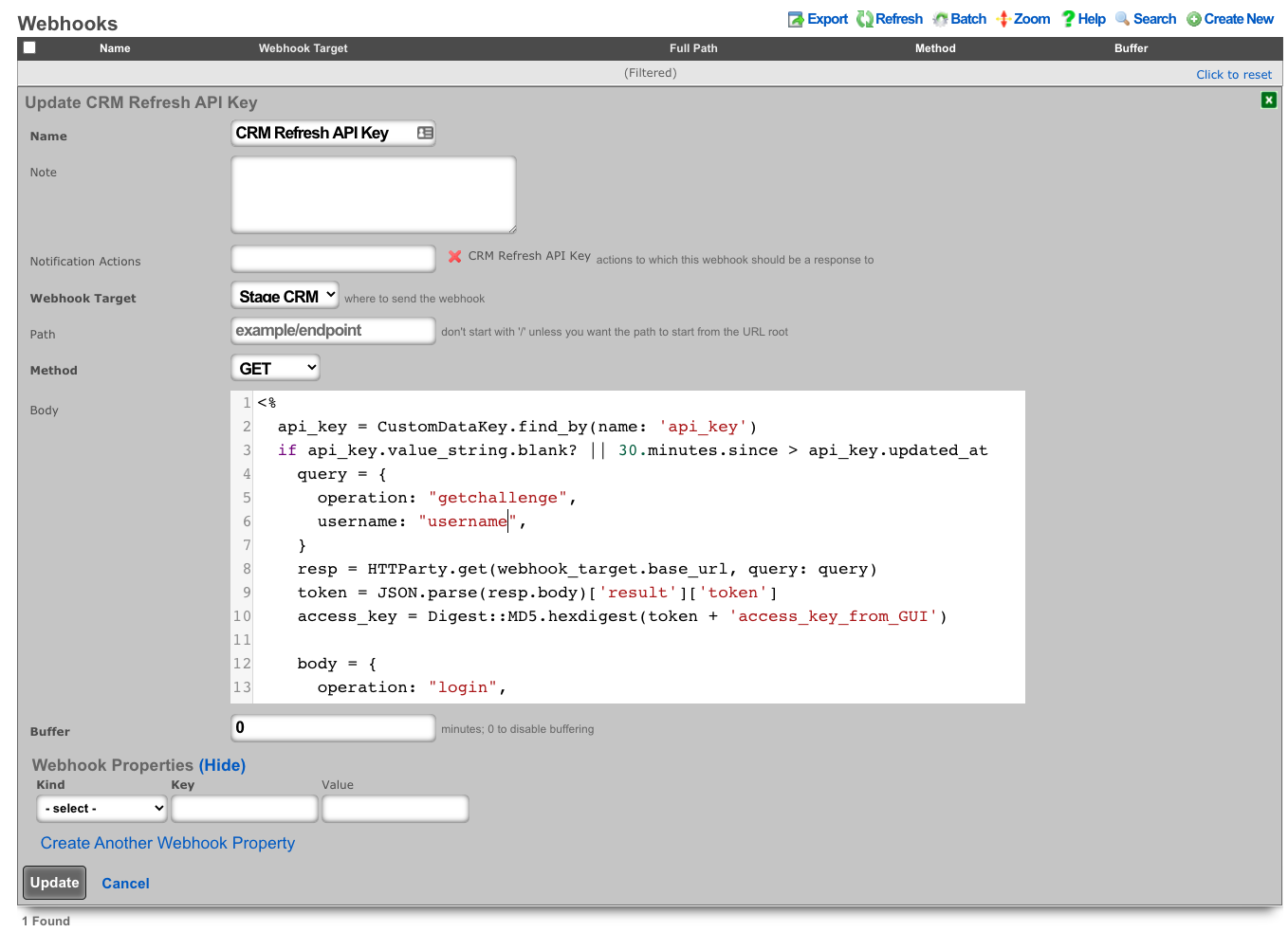

Webhooks

An entry in the webhooks scaffold defines the body, target, method, and additional HTTP Headers and/or Query Parameters for a configured webhook.

The name field is an arbitrary string descriptor used only for administrative identification. Choose a name that reflects the purpose of the record. This field has no bearing on the configuration or settings determined by this scaffold.

The note field is a place for the administrator to enter a comment. This field is purely informational and has no bearing on the configuration settings.

The notification actions field displays what actions this webhook is configured for.

The webhook target dropdown configures the webhook target this webhook will use.

The path field is appended to the base url of the webhook target. This allows multiple webhooks to use the same webhook target, and be configured for unique endpoints.

The body field contains the body of the webhook.

The buffer field configures a time period (in minutes) before sending the webhook. "0" will disable buffering.

The body encoding selector defines an optional encoding scheme. Options include RAW (no encoding), Base64, and Base64 w/newline every 60 characters.

The webhook properties fields allow the operator to define additional HTTP Headers, or Query Parameters to send only with this individual webhook.

Substitution

Payload fields may contain special keywords surrounded by % signs that will be substituted with relevant values. This enables the operator to deliver values stored in the database as part of the payload.

List of objects available:

| Account Create | Usage Plan Purchase | Transaction: success/failure |

|---|---|---|

| cluster_node | cluster_node | cluster_node |

| custom_email | custom_email | custom_email |

| device_option | device_option | device_option |

| ip_group | login_session | login_session |

| login_session | usage_plan | merchant |

| usage_plan | account | payment_method |

| account | merchant_transaction | |

| usage_plan | ||

| account |

| Credit Card Expiring | Coupon Redemption | Account Charge: success/failure/no response |

|---|---|---|

| cluster_node | cluster_node | cluster_node |

| custom_email | custom_email | custom_email |

| device_option | device_option | device_option |

| login_session | coupon | login_session |

| payment_method | login_session | payment_method |

| usage_plan | usage_plan | response |

| account | account | usage_plan |

| account |

| Trigger: Connections | Trigger: Quota | Trigger: DPI |

|---|---|---|

| cluster_node | cluster_node | cluster_node |

| custom_email | custom_email | custom_email |

| device_option | device_option | device_option |

| login_session | login_session | login_session |

| max_connections_trigger | quota_trigger | snort_trigger |

| transient_group_membership | transient_group_membership | transient_group_membership |

| account | account | account |

| Trigger: Time | Trigger: Log Hits | Health Notice: create |

|---|---|---|

| cluster_node | cluster_node | cluster_node |

| custom_email | custom_email | custom_email |

| device_option | device_option | device_option |

| login_session | login_session | health_notice |

| time_trigger | log_hits_trigger | |

| transient_group_membership | transient_group_membership | |

| account | account |

| Health Notice: cured |

|---|

| cluster_node |

| custom_email |

| device_option |

| health_notice |

List of objects available for all associated record types:

| Aged AR Penalty |

|---|

| cluster_node |

| custom_email |

| device_option |

| aged_ar_penalty |

| login_session |

| payment_method |

| usage_plan |

| account |
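
Since each event exposes a different set of objects, it can be useful to check a payload for placeholders that the chosen event cannot fill. The sketch below copies two columns from the tables above into a hash and reports any placeholder whose object is not listed. The method name and sample payload are hypothetical.

```ruby
# Objects available per event, copied from two columns of the tables above.
available_objects = {
  "Coupon Redemption"     => %w[cluster_node custom_email device_option coupon
                                login_session usage_plan account],
  "Health Notice: create" => %w[cluster_node custom_email device_option health_notice]
}

# Return the placeholders whose object is not offered for the given event.
def unavailable_placeholders(payload, allowed)
  payload.scan(/%(\w+)\.(\w+)%/)
         .reject { |object, _attribute| allowed.include?(object) }
         .map { |object, attribute| "%#{object}.#{attribute}%" }
end

payload = '{"login":"%account.login%","code":"%coupon.code%","merchant":"%merchant.name%"}'
allowed = available_objects.fetch("Coupon Redemption")
puts unavailable_placeholders(payload, allowed).inspect
```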

List of attributes available for each object:

| account | usage_plan | merchant |

|---|---|---|

| id | id | id |

| type | account_group_id | name |

| login | name | gateway |

| crypted_password | description | login |

| salt | currency | password |

| state | recurring_method | test |

| first_name | recurring_day | note |

| last_name | variable_recurring_day | created_at |

| | automatic_login | updated_at |

| usage_plan_id | note | created_by |

| usage_minutes | created_at | updated_by |

| unlimited_usage_minutes | updated_at | signature |

| usage_expiration | created_by | partner |

| no_usage_expiration | updated_by | invoice_prefix |

| automatic_login | time_plan_id | integration |

| note | quota_plan_id | store_payment_methods |

| logged_in_at | usage_lifetime_time | live_url |

| created_at | absolute_usage_lifetime | pem |

| updated_at | unlimited_usage_lifetime | scratch |

| created_by | no_usage_lifetime | dup_timeout_seconds |

| updated_by | recurring_retry_grace_minutes | |

| mb_up | recurring_fail_limit | |

| mb_down | prorate_credit | |

| pkts_up | permit_unpaid_ar | |

| pkts_down | pms_server_id | |

| usage_mb_up | lock_devices | |

| usage_mb_down | scratch | |

| unlimited_usage_mb_up | max_sessions | |

| unlimited_usage_mb_down | max_devices | |

| company | unlimited_devices | |

| address1 | unlimited_sessions | |

| address2 | usage_lifetime_time_unit | |

| city | max_dedicated_ips | |

| region | pms_guest_match_operator | |

| zip | recurring_lifetime_time | |

| country | recurring_lifetime_time_unit | |

| phone | unlimited_recurring_lifetime | |

| bill_at | sms_gateway_id | |

| lock_version | validation_method | |

| charge_attempted_at | validation_grace_minutes | |

| lock_devices | max_party_devices | |

| relative_usage_lifetime | unlimited_party_devices | |

| scratch | upnp_enabled | |

| portal_message | automatic_provision | |

| max_devices | conference_id | |

| unlimited_devices | ips_are_static | |

| max_sessions | base_price | |

| unlimited_sessions | vtas_are_static | |

| max_dedicated_ips | manual_ar | |

| account_group_id | ||

| email2 | ||

| pre_shared_key | ||

| phone_validation_code | ||

| email_validation_code | ||

| phone_validated | ||

| email_validated | ||

| phone_validation_code_expires_at | ||

| email_validation_code_expires_at | ||

| max_party_devices | ||

| unlimited_party_devices | ||

| nt_password | ||

| upnp_enabled | ||

| automatic_provision | ||

| ips_are_static | ||

| guid | ||

| vtas_are_static | ||

| account_id | ||

| max_sub_accounts | ||

| unlimited_sub_accounts | ||

| approved_by | ||

| approved_at | ||

| pending_admin_approval | ||

| wispr_data | ||

| hide_from_operator |

| payment_method | merchant_transaction | coupon |

|---|---|---|

| id | id | id |

| account_id | account_id | usage_plan_id |

| active | payment_method_id | code |

| company | merchant_id | credit |

| address1 | usage_plan_id | expires_at |

| address2 | amount | note |

| city | currency | created_by |

| state | test | updated_by |

| zip | ip | created_at |

| country | mac | updated_at |

| phone | customer | batch |

| note | scratch | |

| created_at | merchant_string | max_redemptions |

| updated_at | description | unlimited_redemptions |

| created_by | success | |

| updated_by | response_yaml | |

| scratch | created_at | |

| customer_id | updated_at | |

| card_id | created_by | |

| nickname | updated_by | |

| encrypted_cc_number | message | |

| encrypted_cc_number_iv | authorization | |

| encrypted_cc_expiration_month | hostname | |

| encrypted_cc_expiration_month_iv | http_user_agent_id | |

| encrypted_cc_expiration_year | account_group_id | |

| encrypted_cc_expiration_year_iv | subscription_id | |

| encrypted_first_name | ||

| encrypted_first_name_iv | ||

| encrypted_middle_name | ||

| encrypted_middle_name_iv | ||

| encrypted_last_name | ||

| encrypted_last_name_iv | ||

| cc_number | ||

| cc_expiration_month | ||

| cc_expiration_year | ||

| first_name | ||

| middle_name | ||

| last_name |

| login_session | ip_group | device_option |

|---|---|---|

| id | id | id |

| account_id | policy_id | name |

| radius_realm_id | name | active |

| login | priority | device_location |

| ip | note | domain_name |

| mac | created_at | ntp_server |

| expires_at | updated_at | time_zone |

| online | created_by | smtp_address |

| radius_acct_session_id | updated_by | rails_env |

| radius_response | scratch | note |

| radius_class_attribute | created_at | |

| created_at | updated_at | |

| updated_at | created_by | |

| created_by | updated_by | |

| updated_by | smtp_port | |

| bytes_up | smtp_domain | |

| bytes_down | smtp_username | |

| pkts_up | smtp_password | |

| pkts_down | cluster_node_id | |

| usage_bytes_up | scratch | |

| usage_bytes_down | log_rotate_hour | |

| ldap_domain_id | log_rotate_count | |

| radius_realm_server_id | ssh_port | |

| ldap_domain_server_id | country_code | |

| cluster_node_id | disable_hyperthreading | |

| shared_credential_group_id | developer_mode | |

| ip_group_id | sync_builtin_admins | |

| account_group_id | delayed_job_workers | |

| usage_plan_id | log_level | |

| lock_version | max_forked_processes | |

| hostname | soap_port | |

| total_bytes_up | reboot_timestamp | |

| total_bytes_down | reboot_time_zone | |

| total_pkts_up | limit_sshd_start | |

| total_pkts_down | limit_sshd_rate | |

| radius_server_id | limit_sshd_full | |

| radius_request | use_puma_threads | |

| backend_login_at | ||

| http_user_agent_id | ||

| backend_login_seconds | ||

| portal_login_at | ||

| omniauth_profile_id | ||

| encrypted_password | ||

| encrypted_password_iv | ||

| conference_id | ||

| password |

| custom_email | transient_group_membership | time_trigger |

|---|---|---|

| id | id | id |

| name | ip_group_id | account_group_id |

| from | mac_group_id | name |

| subject | account_group_id | mon |

| body | account_id | tues |

| event | ip | wed |

| note | mac | thurs |

| created_by | reason | fri |

| updated_by | expires_at | sat |

| created_at | created_by | sun |

| updated_at | updated_by | start |

| send_to_account | created_at | end |

| scratch | updated_at | note |

| email_recipient | cluster_node_id | created_by |

| include_custom_reports_in_body | max_connections_trigger_id | updated_by |

| attachment_format | quota_trigger_id | created_at |

| custom_event | time_trigger_id | updated_at |

| delivery_method | snort_trigger_id | ip_group_id |

| sms_gateway_id | hostname | mac_group_id |

| reply_to | radius_group_id | scratch |

| ldap_group_id | flush_states | |

| login_session_id | flush_dhcp | |

| log_hits_trigger_id | flush_arp | |

| flush_states | flush_vtas | |

| flush_dhcp | infrastructure_area_id | |

| flush_arp | previous_infrastructure_area_id | |

| flush_vtas | duration | |

| vulner_assess_trigger_id | current_dwell | |

| previous_dwell |

| log_hits_trigger | snort_trigger | max_connections_trigger |

|---|---|---|

| id | id | id |

| ip_group_id | ip_group_id | ip_group_id |

| mac_group_id | name | name |

| name | duration | max_connections |

| note | note | duration |

| log_file | created_by | note |

| duration | updated_by | created_by |

| max_hits | created_at | updated_by |

| window | updated_at | created_at |

| scratch | scratch | updated_at |

| created_by | mac_group_id | scratch |

| updated_by | flush_states | mac_group_id |

| created_at | flush_dhcp | flush_states |

| updated_at | flush_arp | flush_dhcp |

| flush_states | flush_vtas | flush_arp |

| flush_dhcp | flush_vtas | |

| flush_arp | max_duration | |

| flush_vtas | max_mb | |

| period | ||

| active_or_expired | ||

| max_duration_logic | ||

| max_mb_logic |

| quota_trigger | health_notice | cluster_node |

|---|---|---|

| id | id | id |

| account_group_id | cluster_node_id | name |

| name | name | iui |

| usage_mb_down | short_message | database_password |

| usage_mb_down_unit | long_message | note |

| usage_mb_up | cured_short_message | created_by |

| usage_mb_up_unit | cured_long_message | updated_by |

| up_down_logic_operator | severity | created_at |

| note | cured_at | updated_at |

| created_by | created_at | ip |

| updated_by | updated_at | ssh_public_key |

| created_at | created_by | scratch |

| updated_at | updated_by | heartbeat_at |

| radius_group_id | fleet_node_id | data_plane_ha_timeout_seconds |

| ldap_group_id | node_mode | |

| period | cluster_node_team_id | |

| unlimited_period | wal_receiver_pid | |

| duration | wal_receiver_status | |

| unlimited_duration | wal_receiver_receive_start_lsn | |

| scratch | wal_receiver_receive_start_tli | |

| ip_group_id | wal_receiver_received_lsn | |

| mac_group_id | wal_receiver_received_tli | |

| flush_states | wal_receiver_latest_end_lsn | |

| flush_dhcp | wal_receiver_slot_name | |

| flush_arp | wal_receiver_sender_host | |

| flush_vtas | wal_receiver_sender_port | |

| wal_receiver_last_msg_send_time | ||

| wal_receiver_last_msg_receipt_time | ||

| wal_receiver_latest_end_time | ||

| op_cluster_node_id | ||

| priority | ||

| auto_registration | ||

| permit_new_nodes | ||

| auto_approve_new_nodes | ||

| pending_auto_registration | ||

| pending_approval | ||

| control_plane_ha_backoff_seconds | ||

| data_plane_ha_enabled | ||

| upgrading | ||

| enable_radius_proxy |

| aged_ar_penalty |

|---|

| id |

| name |

| amount |

| days |

| suspend_account |

| note |

| created_at |

| updated_at |

| created_by |

| updated_by |

| custom_email_id |

| scratch |

| record_type |

| days_type |
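As a rough illustration of how the attributes listed above might be referenced from a template, the following standalone Ruby renders an ERB body against stand-in objects. The `account_stub` and `plan_stub` structs are hypothetical and exist only for this sketch; on the rXg the real records are supplied when the event fires, and which objects are in scope depends on the event type.

```ruby
require 'erb'

# Hypothetical stand-ins for records the rXg would supply at render time.
account_stub = Struct.new(:first_name, :last_name, :login)
plan_stub    = Struct.new(:name, :currency)

account    = account_stub.new('Ada', 'Lovelace', 'ada')
usage_plan = plan_stub.new('Premium', 'USD')

# Template body using object.attribute references from the tables above.
body_template = <<~'BODY'
  Hello <%= account.first_name %> <%= account.last_name %>,
  Your login "<%= account.login %>" is now on the <%= usage_plan.name %> plan.
BODY

email_body = ERB.new(body_template).result_with_hash(account: account, usage_plan: usage_plan)
puts email_body
```

Rendering a template against stubs like this is only a way to check wording and attribute names before pasting the body into a template record.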

Use Cases

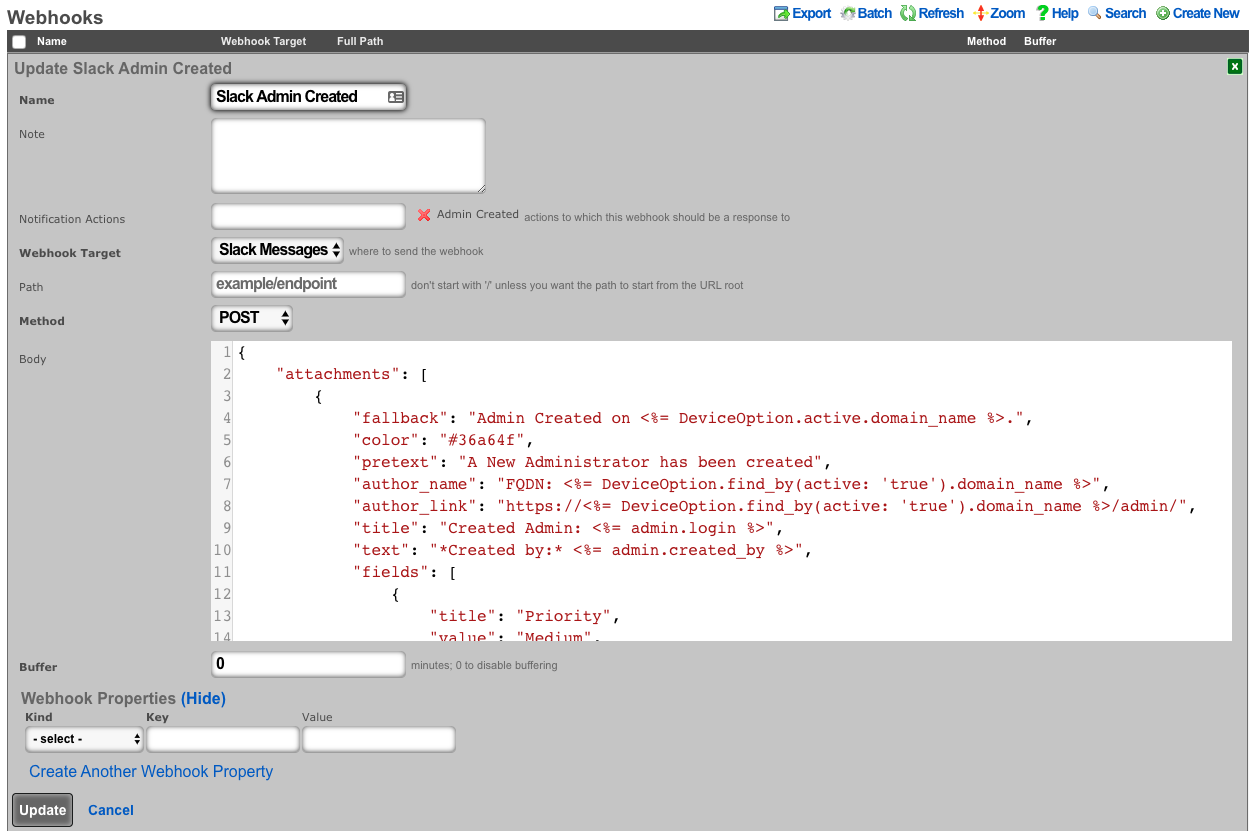

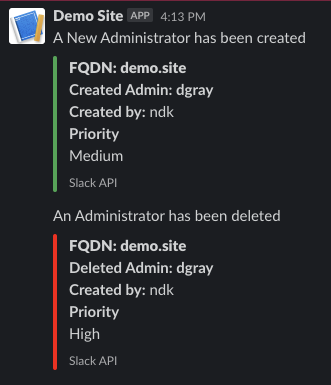

Slack Integration: Notification when an admin is created or deleted

Procedure:

- Use the Slack guide to create a new app and configure incoming webhooks.

- Navigate to Services-->Notifications and create a new webhook target.

- Insert the webhook address from your Slack app as the base URL.

- Create a webhook for created admins. An example body is:

{

"attachments": [

{

"fallback": "Admin Created on <%= DeviceOption.active.domain_name %>.",

"color": "#36a64f",

"pretext": "A New Administrator has been created",

"author_name": "FQDN: <%= DeviceOption.active.domain_name %>",

"author_link": "https://<%= DeviceOption.active.domain_name %>/admin/",

"title": "Created Admin: <%= admin.login %>",

"text": "*Created by:* <%= admin.created_by %>",

"fields": [

{

"title": "Priority",

"value": "Medium",

"short": false

}

],

"footer": "Slack API"

}

]

}

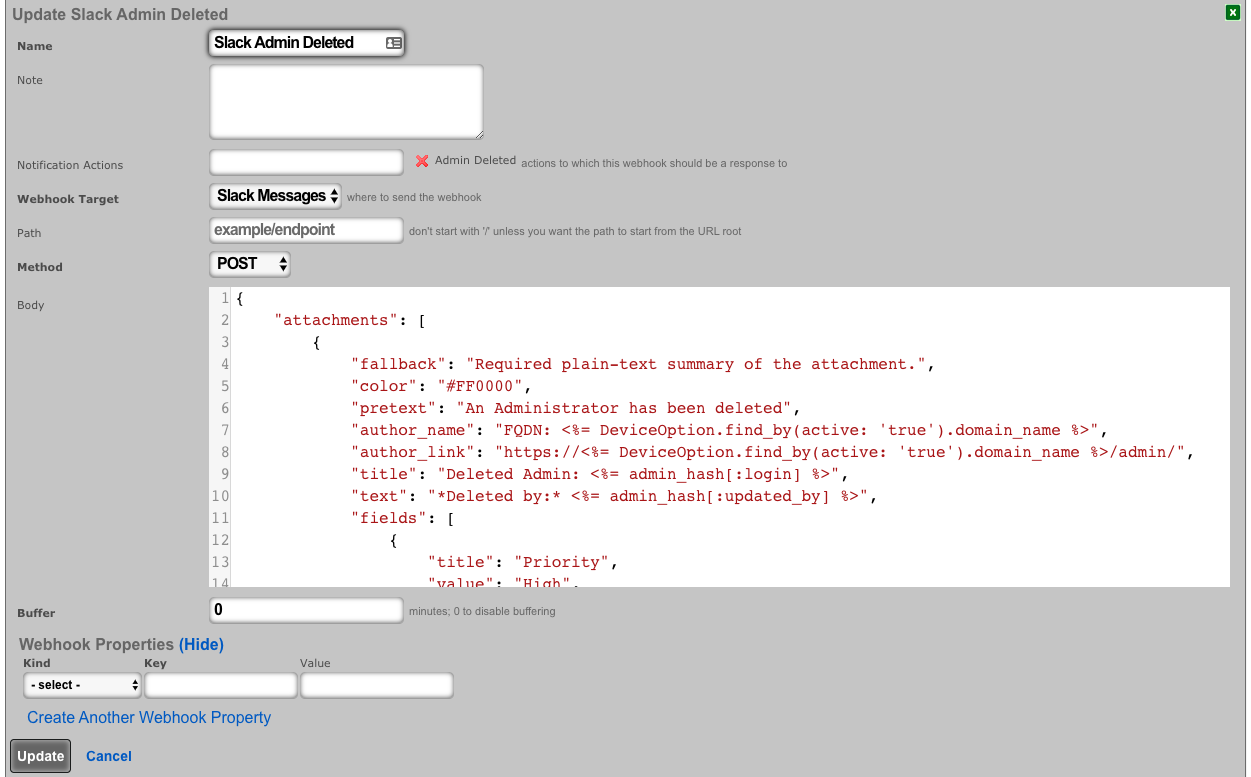

- Create a webhook for deleted admins. An example body is shown below. Note the use of <%= admin_hash %>, which is needed because the record has already been deleted:

{

"attachments": [

{

"fallback": "Admin Deleted on <%= DeviceOption.active.domain_name %>.",

"color": "#FF0000",

"pretext": "An Administrator has been deleted",

"author_name": "FQDN: <%= DeviceOption.find_by(active: 'true').domain_name %>",

"author_link": "https://<%= DeviceOption.find_by(active: 'true').domain_name %>/admin/",

"title": "Deleted Admin: <%= admin_hash[:login] %>",

"text": "*Deleted by:* <%= admin_hash[:updated_by] %>",

"fields": [

{

"title": "Priority",

"value": "High",

"short": false

}

],

"footer": "Slack API"

}

]

}

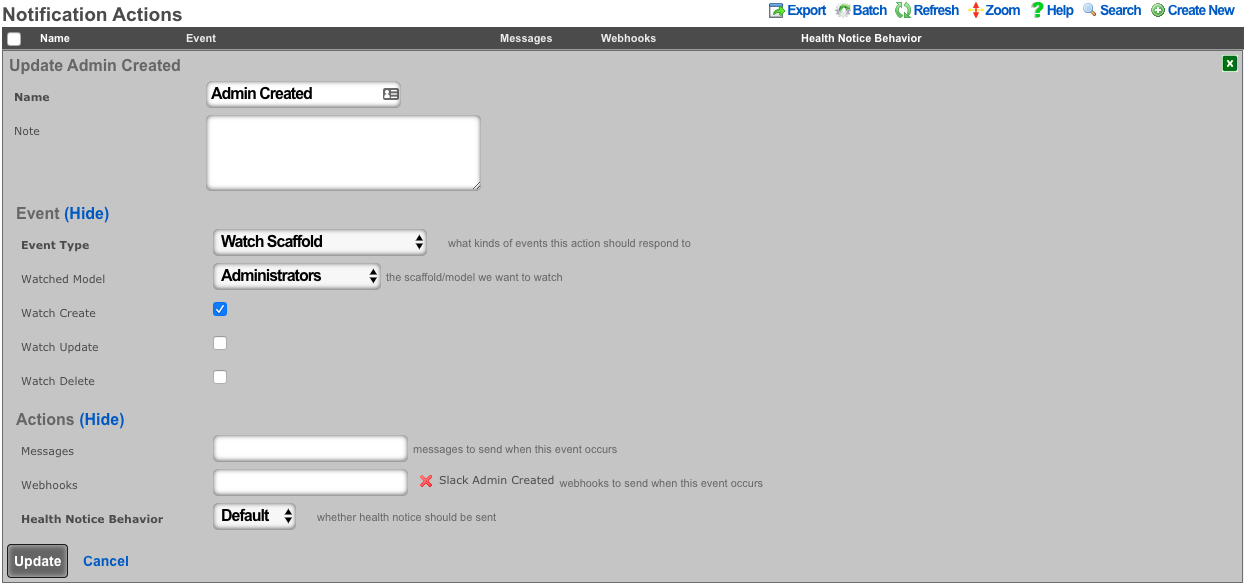

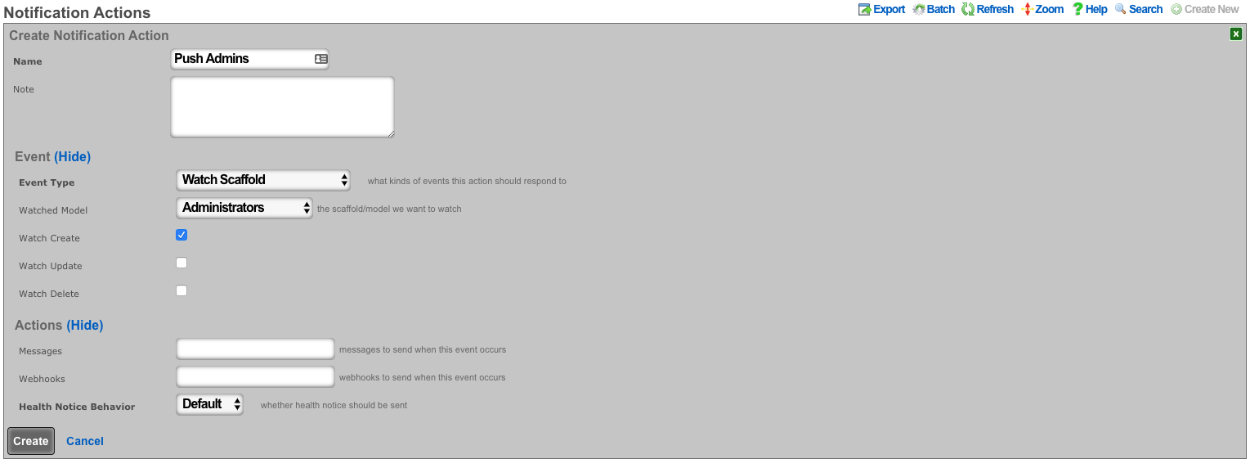

- Create a notification action for created admins.

- Choose Event Type: Watch Scaffold

- Choose Watched Model: Administrators

- Select: Watch Create

- Choose the Admin Created Webhook under actions

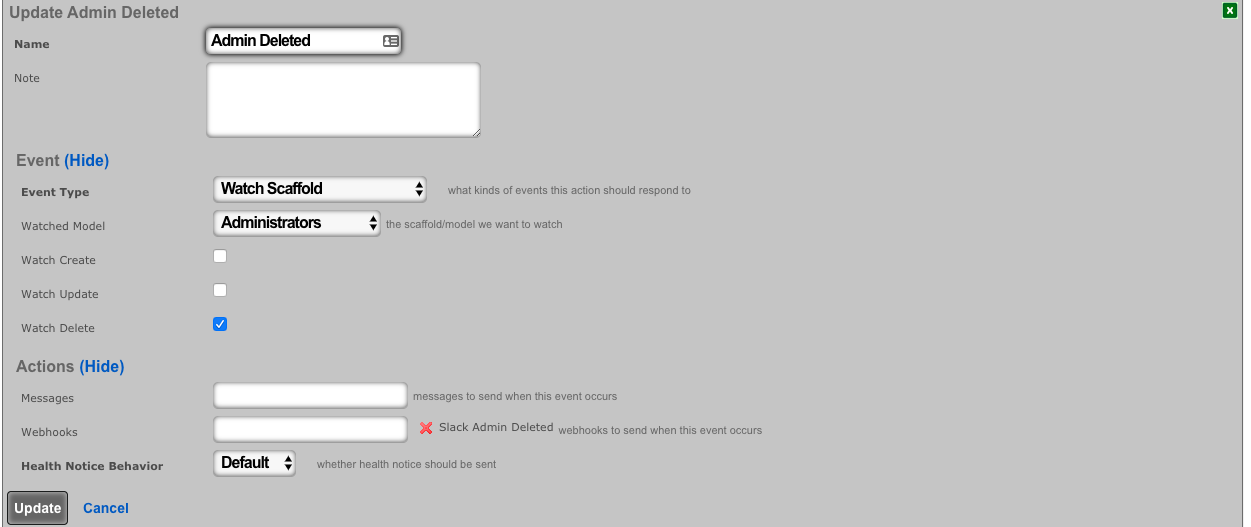

- Create a notification action for deleted admins.

- Choose Event Type: Watch Scaffold

- Choose Watched Model: Administrators

- Select: Watch Delete

- Choose the Admin Deleted Webhook under actions

- Navigate to System-->Admins, and create a new admin. Then delete the new admin.

The channel configured in your Slack app will have messages posted via the rXg Notification Actions subsystem.
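Because a webhook body is an ERB template that must render to valid JSON, a single stray or missing quote is enough to make the request fail. The sketch below shows one way to check a body offline: it renders the template with stand-in values and parses the result. The `check_webhook_body` helper, the `admin` stub, and the `domain_name` local are assumptions made for this example and are not part of the rXg.

```ruby
require 'erb'
require 'json'

# Render an ERB webhook body with stand-in values and confirm it is valid JSON.
# Returns the parsed payload, or raises JSON::ParserError if the body is malformed.
def check_webhook_body(template, locals)
  rendered = ERB.new(template).result_with_hash(locals)
  JSON.parse(rendered)
end

# Hypothetical stub for the admin record; the rXg supplies the real one.
admin = Struct.new(:login, :created_by).new('jdoe', 'root')

template = <<~'BODY'
  {
    "attachments": [
      {
        "fallback": "Admin Created on <%= domain_name %>.",
        "title": "Created Admin: <%= admin.login %>",
        "text": "*Created by:* <%= admin.created_by %>"
      }
    ]
  }
BODY

payload = check_webhook_body(template, admin: admin, domain_name: 'rxg.example.com')
puts payload['attachments'].first['title']

# A body with a missing quote is rejected before it ever reaches Slack.
broken = template.sub('"Admin Created', 'Admin Created')
begin
  check_webhook_body(broken, admin: admin, domain_name: 'rxg.example.com')
  broken_ok = true
rescue JSON::ParserError
  broken_ok = false
end
puts "broken body accepted? #{broken_ok}"
```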

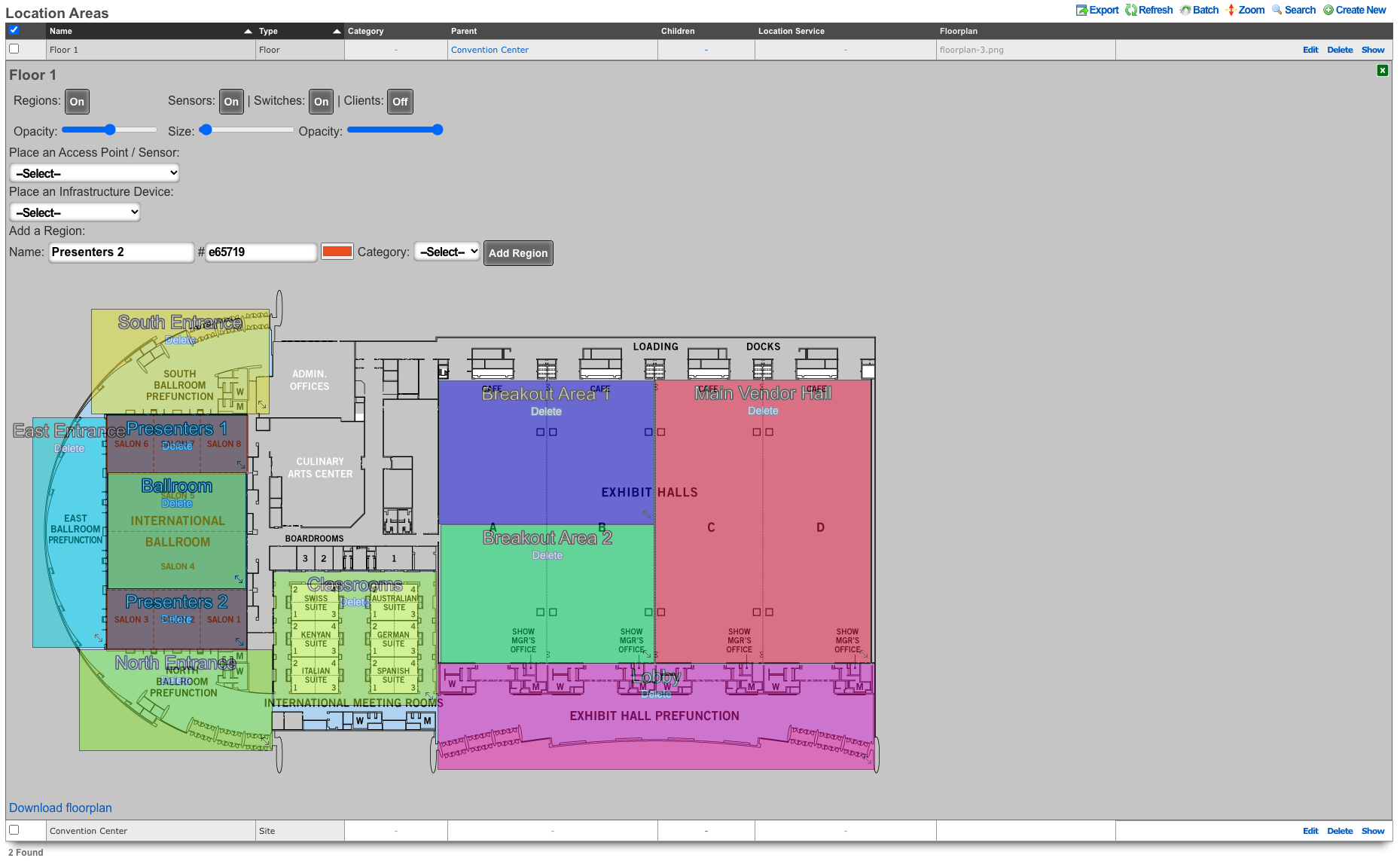

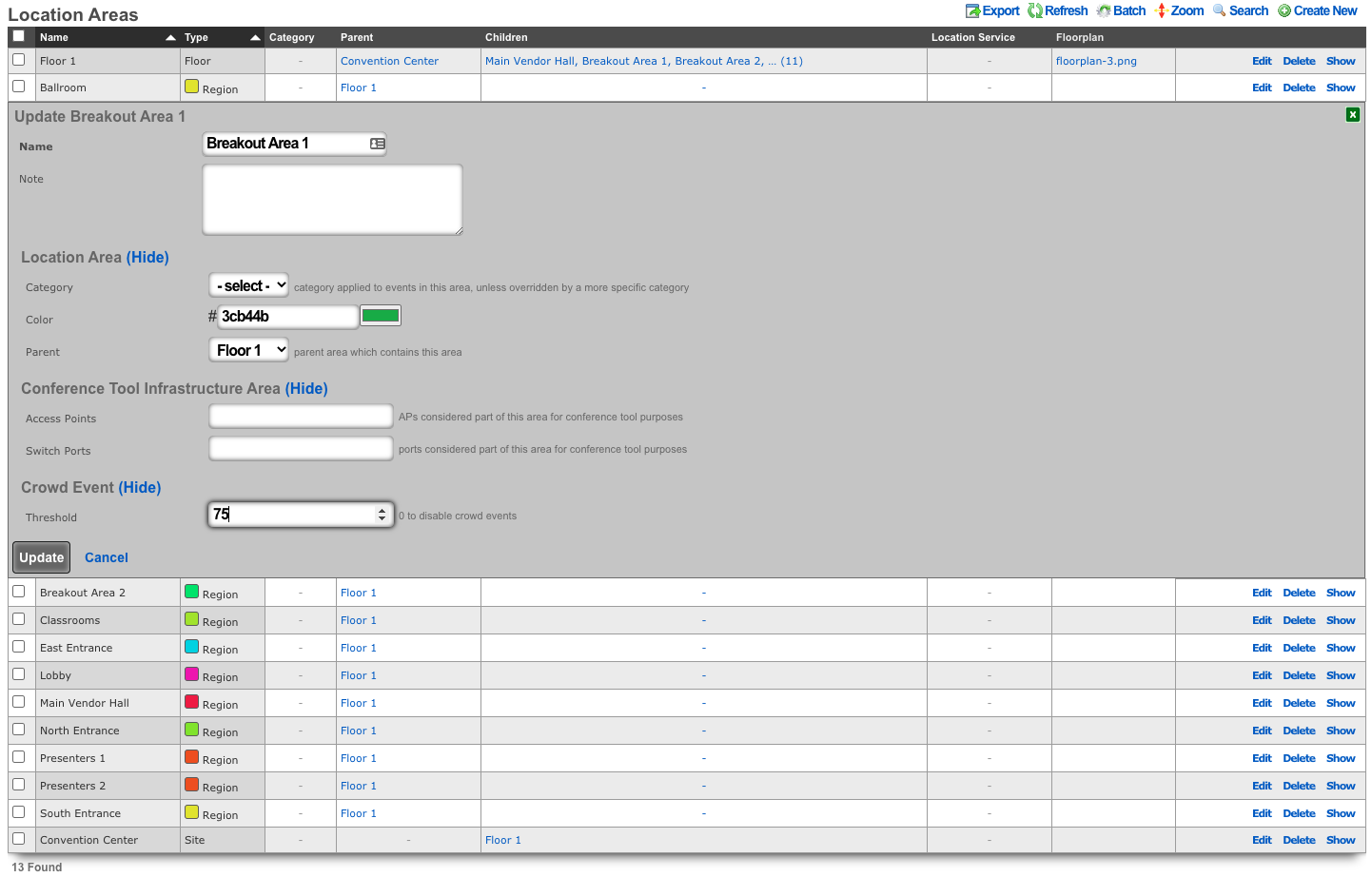

Location Based Crowding Notifications

Procedure:

- Navigate to Network :: Location

- Create or edit existing location areas.

- Edit individual areas and set crowd event thresholds. Thresholds can be set at region, floor, and site levels.

- Navigate to Services :: Notifications

- Create a notification action and select the desired messages, webhooks and/or health notices to trigger.

Push Account To Remote rXg On Create

Procedure:

- Navigate to Services :: Notifications

Create a new Notification Action

- Event Type set to Watch Scaffold

- Watched Model set to Accounts

- Check the Watch Create checkbox

- Click Create.

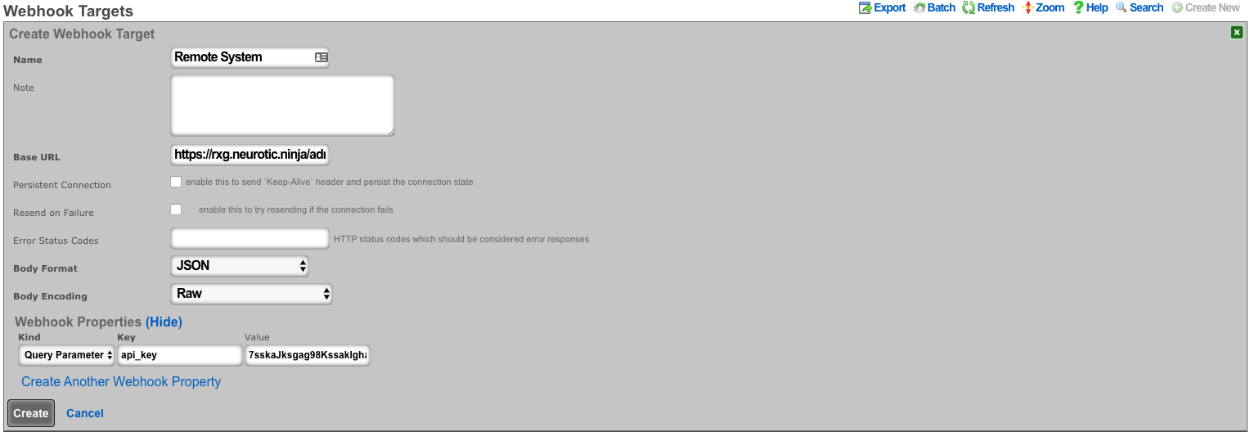

Create a new Webhook Target

- Input a Name

- The base URL will be https://FQDN\_of\_remote\_system/admin/scaffolds/

- Select body format, JSON in this case, and Body Encoding as RAW

- Add a Webhook Property with Kind = Query Parameter and Key = api_key; the value is the API key of an admin on the remote system

- Click Create

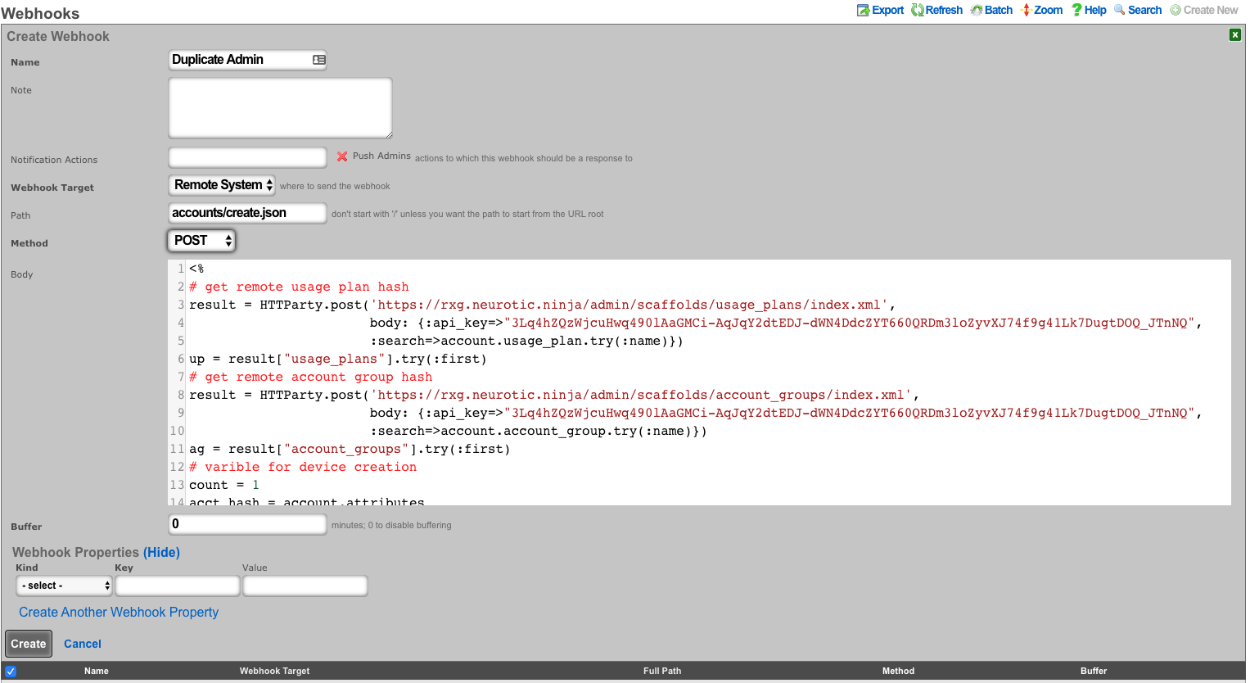

Create a new Webhook

- Input a name

- Select Notification action created in step 1

- Select the webhook target created above

- Set path to accounts/create.json

- Set Method to POST

- Enter code into body (example below), click create.

<%

# get remote usage plan hash

result = HTTParty.post('https://FQDN_of_remote_system/admin/scaffolds/usage_plans/index.xml',

body: {:api_key=>"REMOTE_ADMIN_API_KEY",

:search=>account.usage_plan.try(:name)})

up = result["usage_plans"].try(:first)

# get remote account group hash

result = HTTParty.post('https://FQDN_of_remote_system/admin/scaffolds/account_groups/index.xml',

body: {:api_key=>"REMOTE_ADMIN_API_KEY",

:search=>account.account_group.try(:name)})

ag = result["account_groups"].try(:first)

# counter used to number the devices

count = 1

acct_hash = account.attributes

# remove attributes we don't want to push

acct_hash.delete("id")

acct_hash.delete("usage_plan_id")

acct_hash.delete("account_group_id")

acct_hash.delete("type")

acct_hash.delete("created_at")

acct_hash.delete("updated_at")

acct_hash.delete("created_by")

acct_hash.delete("updated_by")

acct_hash.delete("mb_up")

acct_hash.delete("mb_down")

acct_hash.delete("crypted_password")

acct_hash.delete("salt")

# make temporary password

acct_hash["password"] = acct_hash["password_confirmation"] = acct_hash["pre_shared_key"]

# add usage plan and do_apply_plan to hash if found on remote system

if up

acct_hash["usage_plan"] = up["id"]

acct_hash["do_apply_usage_plan"] = 1

end

# add account group to hash if found on remote system

if ag

acct_hash["account_group"] = ag["id"]

end

# add all devices to account hash

acct_hash["devices"] = {}

account.devices.each do |device|

acct_hash["devices"]["device#{count.to_s}"] = {

"name" => device.name,

"mac" => device.mac

}

count += 1

end

%>

{

"record": <%= acct_hash.to_json %>

}
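The attribute filtering and device numbering in the body above can also be sketched as a small pure-Ruby function, which makes the resulting payload shape easy to inspect outside the rXg. The `build_account_payload` name and the sample data are hypothetical; plain hashes stand in for `account.attributes` and `account.devices`.

```ruby
require 'json'

# Attributes that should not be copied to the remote system.
excluded = %w[id usage_plan_id account_group_id type created_at updated_at
              created_by updated_by mb_up mb_down crypted_password salt]

# Build the "record" payload from an attribute hash and a list of devices.
def build_account_payload(attrs, devices, excluded)
  record = attrs.reject { |key, _| excluded.include?(key) }
  record['devices'] = {}
  devices.each.with_index(1) do |device, n|
    record['devices']["device#{n}"] = { 'name' => device[:name], 'mac' => device[:mac] }
  end
  { 'record' => record }
end

# Hypothetical sample data standing in for account.attributes and account.devices.
attrs   = { 'id' => 7, 'login' => 'ada', 'salt' => 'x', 'first_name' => 'Ada' }
devices = [{ name: 'laptop', mac: '00:11:22:33:44:55' }]

payload = build_account_payload(attrs, devices, excluded)
puts JSON.pretty_generate(payload)
```

Filtering with a single exclusion list keeps the set of withheld attributes in one place, which may be easier to audit than a series of individual delete calls.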

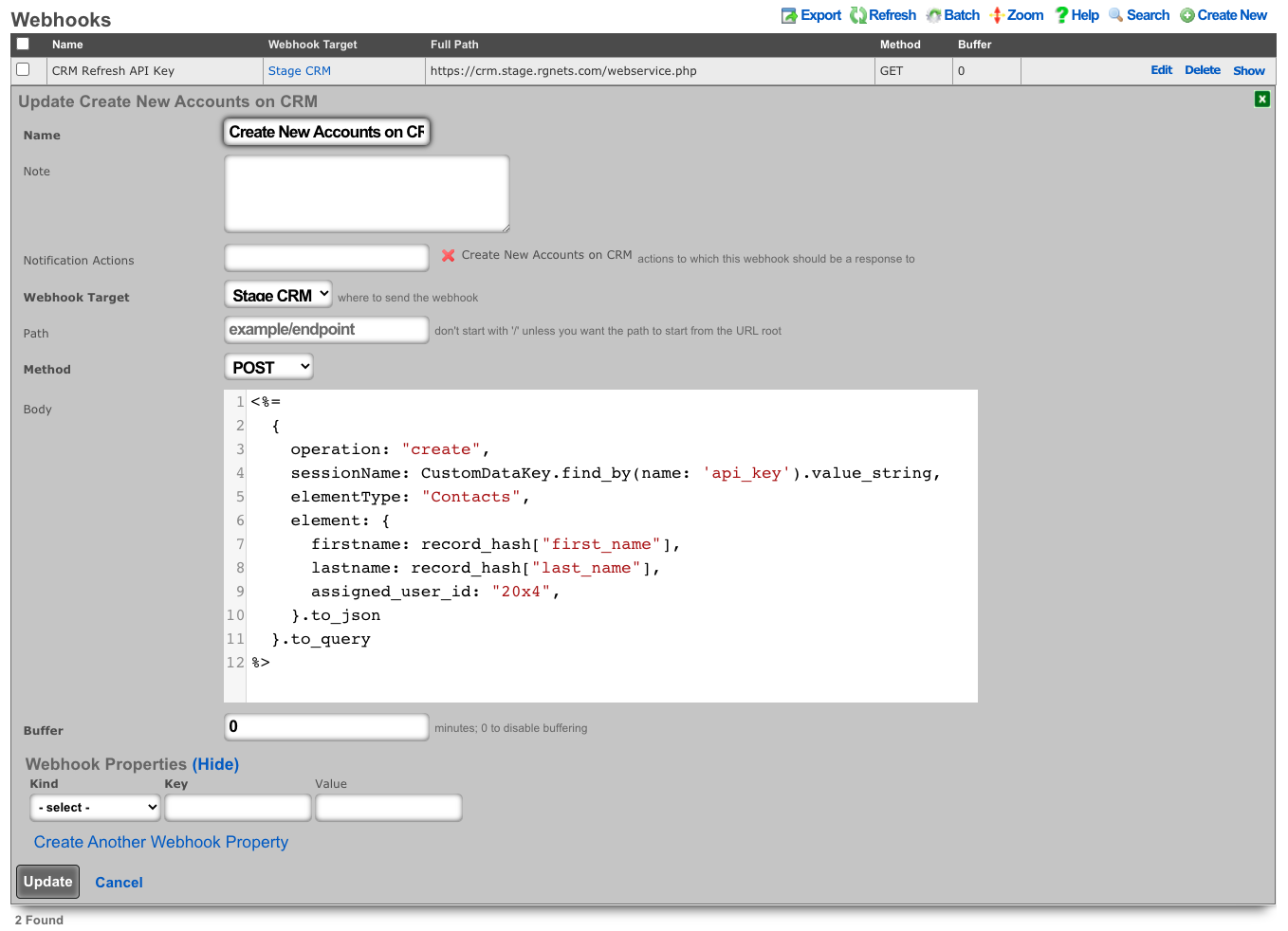

CRM Integration

This example will explore integrating the rXg with the community edition of vTiger CRM. This case demonstrates using an API requiring a multistep login process, and use of a disposable session key rather than static credentials.

Procedure:

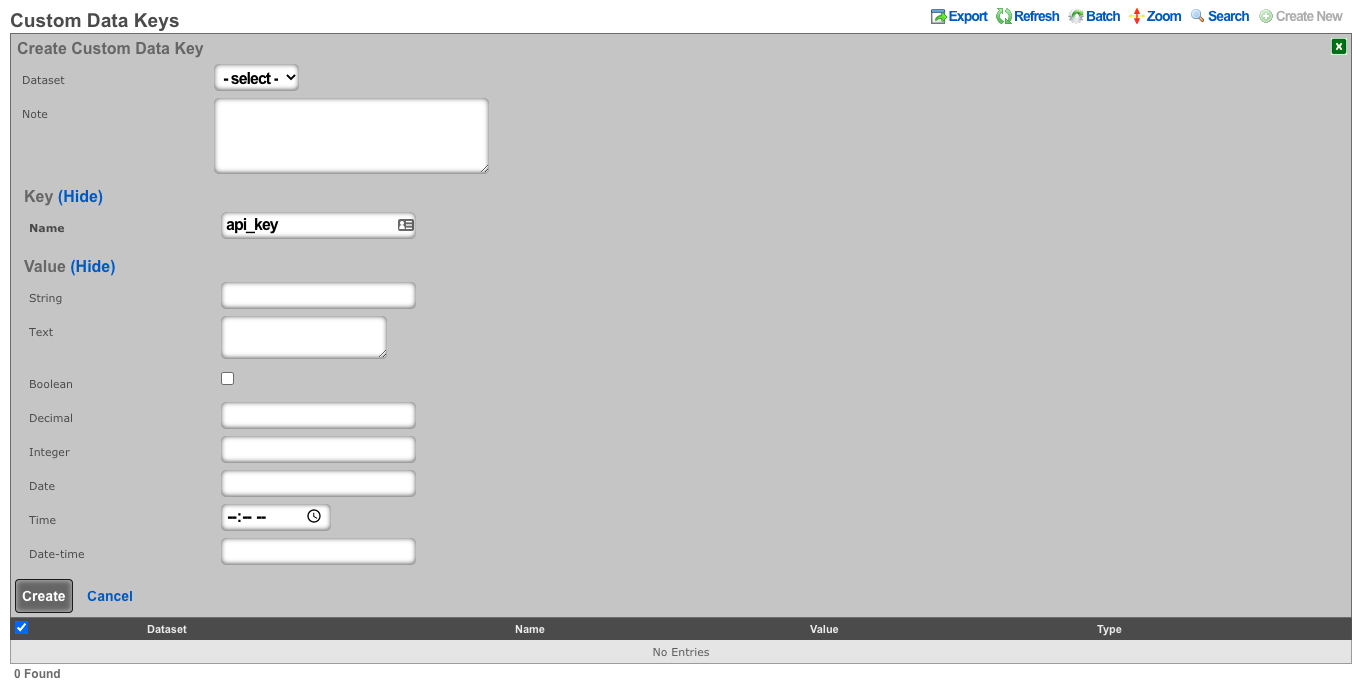

- The community edition of vTiger uses a combination of a challenge token, username, and access key to generate an expiring session key to be used with API calls. Create a custom data key under System :: Portals as a place to store the key.

- The only necessary field to populate is the name.

- Create a notification action to periodically get the session key, and perform a log in to the CRM.

- Set the event type to periodic.

- Choose a time within the session key expiration (the webhook will contain logic to determine if a refresh is necessary).

- Create a webhook target for the CRM.

- Add an HTTP header of "Content-Type": "application/x-www-form-urlencoded"

- Create a webhook that uses embedded ruby to store the current session key in the previously defined custom data key. This example looks at the age of the current key to see if a new key is necessary before executing.

- Choose the previously created notification action.

- Choose the CRM webhook target.

- Example Body:

<%

api_key = CustomDataKey.find_by(name: 'api_key')

if api_key.value_string.blank? || api_key.updated_at < 30.minutes.ago

query = {

operation: "getchallenge",

username: "username",

}

resp = HTTParty.get(webhook_target.base_url, query: query)

token = JSON.parse(resp.body)['result']['token']

access_key = Digest::MD5.hexdigest(token + 'access_key_from_GUI')

body = {

operation: "login",

username: "username",

accessKey: access_key,

}

login = HTTParty.post(webhook_target.base_url, body: body)

session_id = JSON.parse(login.body)['result']['sessionName']

api_key.value_string = session_id

api_key.save!

end

raise DoNotSendError

%>

- Create a notification action to trigger when a new account is created.

- Choose the event type: Watch Scaffold

- Choose the watched model: Accounts

- Check: Watch Create

- Create a webhook to create the account on the CRM.

- Select the previously created notification action.

- Choose the CRM webhook target.

- Set the method to POST

- Example Body:

<%=

{

operation: "create",

sessionName: CustomDataKey.find_by(name: 'api_key').value_string,

elementType: "Contacts",

element: {

firstname: record_hash["first_name"],

lastname: record_hash["last_name"],

assigned_user_id: "20x4",

}.to_json

}.to_query

%>

- Because vTiger CRM expects the body of the HTTP POST to be URL-encoded, the entire body is enclosed in ERB tags (<%= ... %>) so that Ruby methods such as to_json and to_query can handle the formatting.
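The three pieces of logic in this procedure (deciding whether the session key needs a refresh, deriving the access key from the challenge token, and URL-encoding the create call) can be sketched with the Ruby standard library alone. The helper names below are hypothetical, the 30-minute limit mirrors the example above, and `URI.encode_www_form` is used here as a stand-in for the `to_query` method available inside the rXg.

```ruby
require 'digest/md5'
require 'uri'
require 'json'

# True when the stored session key is missing or older than max_age_seconds.
def session_stale?(session_key, updated_at, now: Time.now, max_age_seconds: 30 * 60)
  session_key.nil? || session_key.empty? || (now - updated_at) > max_age_seconds
end

# Access key as computed in the example: MD5 of the challenge token
# followed by the user's access key.
def access_key_for(token, user_access_key)
  Digest::MD5.hexdigest(token + user_access_key)
end

# Form-encode the "create" call; the element itself travels as a JSON string.
def create_contact_body(session_name, first_name, last_name)
  URI.encode_www_form(
    operation: 'create',
    sessionName: session_name,
    elementType: 'Contacts',
    element: { firstname: first_name, lastname: last_name }.to_json
  )
end

now = Time.now
puts session_stale?('abc', now - 45 * 60, now: now)  # key is 45 minutes old
puts session_stale?('abc', now - 5 * 60, now: now)   # key is 5 minutes old
puts access_key_for('challenge-token', 'access_key_from_GUI').length
puts create_contact_body('session123', 'Ada', 'Lovelace')
```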

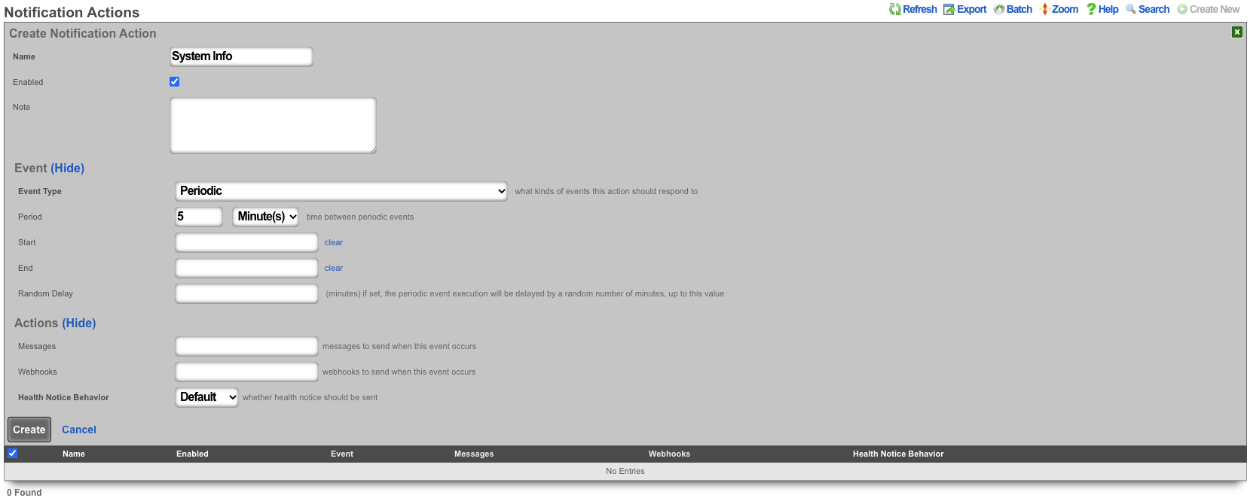

Webhook to retrieve System Info

This example shows how to set up a webhook that pushes system info at a periodic interval.

Procedure:

- Create a new Notification Action

- Populate the name field with the desired name.

- Set the Event Type field to Periodic.

- Specify how often the action should run using the Period field. Default is 5 minutes.

- Click Create.

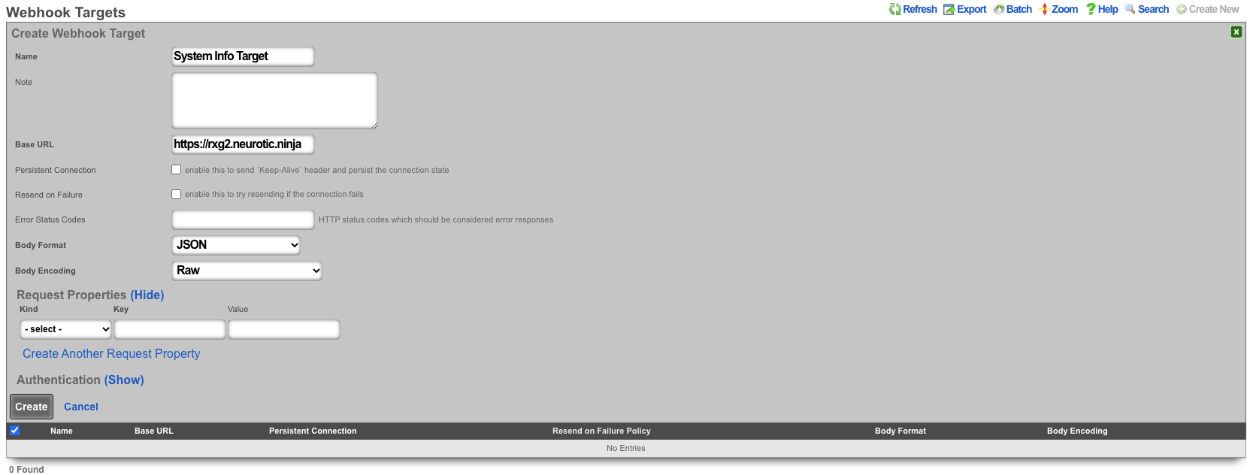

- Create a new Webhook Target

- Populate the name field with the desired name.

- Specify the URL of the target using the Base URL field.

- Click Create.

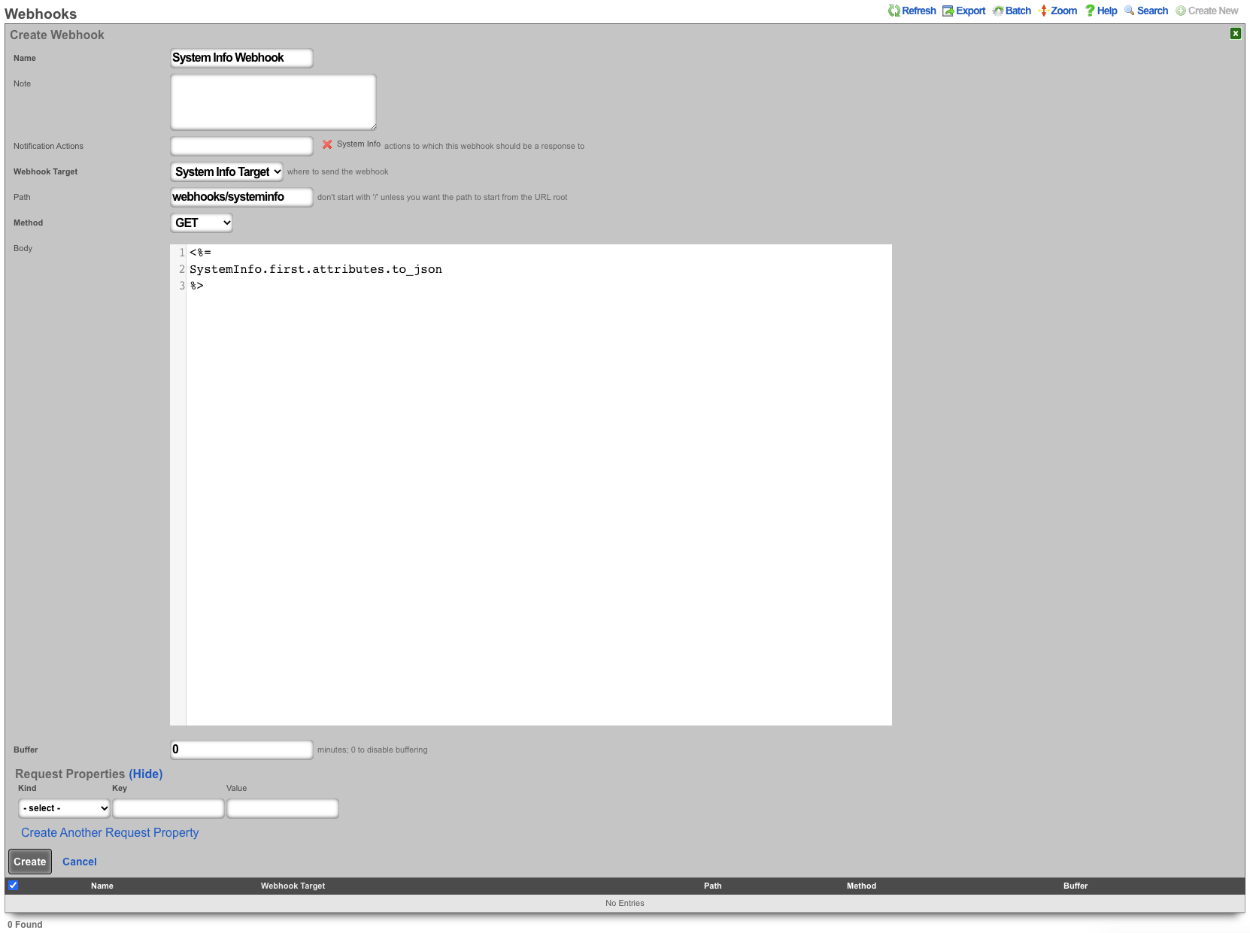

- Create a new Webhook

- Populate the name field with the desired name.

- Use the Notification Actions field to select the notification action created in step 1.

- Select the webhook target created in step 2 in the Webhook Target field.

- Set the path in the Path field.

- The Body field is where we will put our payload.

<%=

SystemInfo.first.attributes.to_json

%>

- Click Create.

The webhook will send a payload similar to the following.

{

"id": 1048577,

"cluster_node_id": null,

"baseboard_asset_tag": "Not Specified",

"baseboard_manufacturer": "Intel Corporation",

"baseboard_product_name": "440BX Desktop Reference Platform",

"baseboard_serial_number": "None",

"baseboard_version": "None",

"bios_vendor": "Phoenix Technologies LTD",

"bios_version": "6.00",

"chassis_asset_tag": "No Asset Tag",

"chassis_manufacturer": "No Enclosure",

"chassis_serial_number": "None",

"chassis_type": "Other",

"chassis_version": "N/A",

"disk_device": "NECVMWar VMware SATA CD00 1.00",

"hostname": "rxg.example.com",

"os_arch": "amd64",

"os_branch": "RELEASE",

"os_kernel": "FreeBSD 12.2-RELEASE-p9 #16 476551a338d",

"os_name": "FreeBSD",

"os_release": "12.2-RELEASE-p9 #16",

"os_version": "12.2",

"processor_family": "Unknown",

"processor_frequency": "2300 MHz",

"processor_manufacturer": "GenuineIntel",

"processor_version": "Intel(R) Xeon(R) D-2146NT CPU @ 2.30GHz",

"rxg_build": "12.999",

"system_manufacturer": "VMware, Inc.",

"system_product_name": "VMware Virtual Platform",

"system_serial_number": "VMware-56 4d fd 29 e4 1a c1 4e-a2 60 81 57 54 ff dd 51",

"system_uuid": "29fd4d56-1ae4-4ec1-a260-815754ffdd51",

"system_version": "None",

"disk_total_gb": 111,

"memory_free_mb": 3874,

"memory_total_mb": 8192,

"memory_used_mb": 4317,

"processor_count": 4,

"uptime": 85371,

"load_avg_15m": "0.78",

"load_avg_1m": "1.09",

"load_avg_5m": "0.84",

"processor_temp": "0.0",

"bios_release_date": "2018-12-12",

"booted_at": "2021-08-30T08:37:23.000-07:00",

"created_by": "rxgd(InstrumentVitals)",

"updated_by": "rxgd(InstrumentVitals)",

"created_at": "2021-08-11T08:38:58.800-07:00",

"updated_at": "2021-08-31T08:20:14.808-07:00",

"rxg_iui": "4 2290 8192 111 ZKORHJVPDUQUZUNIBEWLHDFQ GZQQJUTGUDOIFDNTDUFQSKTW NLDQYHFARJWE",

"system_family": "Not Specified",

"fleet_node_id": null

}
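On the receiving side, a payload like the one above can be reduced to a few health figures. The sketch below is a hypothetical consumer, not part of the rXg. It assumes that uptime is expressed in seconds, which is consistent with the booted_at and updated_at values in the sample, and that load averages arrive as strings.

```ruby
require 'json'

# Summarize a system info payload as received by the webhook target.
def summarize_system_info(json_text)
  info = JSON.parse(json_text)
  used_pct = (info['memory_used_mb'].to_f / info['memory_total_mb'] * 100).round(1)
  {
    hostname: info['hostname'],
    memory_used_pct: used_pct,
    load_avg_5m: info['load_avg_5m'].to_f,  # load averages arrive as strings
    uptime_hours: (info['uptime'] / 3600.0).round(1)
  }
end

# Subset of the sample payload shown above.
sample = <<~PAYLOAD
  {
    "hostname": "rxg.example.com",
    "memory_total_mb": 8192,
    "memory_used_mb": 4317,
    "load_avg_5m": "0.84",
    "uptime": 85371
  }
PAYLOAD

summary = summarize_system_info(sample)
puts summary
```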

Custom Modules

Custom modules are reusable Ruby code libraries that can be shared across multiple custom emails, custom portals, operator portals, webhooks, and backend scripts. They provide a way to define common functionality once and reuse it across different notification contexts.

Overview

A custom module is a Ruby code snippet stored in the custom modules scaffold that can be associated with multiple records. When a custom email is rendered, a webhook is executed, or a backend script runs, any associated custom modules are automatically loaded and made available to the template or script.

Creating a Custom Module

To create a custom module:

- Navigate to Services :: Notifications :: Custom Modules

- Click Create New

- Provide a unique name for the module

- Enter Ruby code in the body field

- Optionally add a note for documentation purposes

The body field should contain valid Ruby code that will be evaluated in the context where it's used. This can include:

- Helper methods

- Class definitions

- Constants

- Utility functions

Example custom module body:

# Helper method to format currency

def format_currency(amount)

sprintf("$%.2f", amount)

end

# Helper method to calculate revenue for a time period

def revenue_for_period(hours_ago)

ArTransaction.where(['created_at > ?', hours_ago.hours.ago]).sum(:credit)

end

Associating Custom Modules

Custom modules can be associated with:

- Custom Emails (custom messages scaffold)

- Custom Portals

- Operator Portals

- Webhooks

- Backend Scripts

When editing any of these records, select one or more custom modules from the custom modules field. The selected modules will be automatically loaded when the record is executed or rendered.

How Custom Modules Work

Custom Emails

When a custom email is rendered (either for delivery or preview), associated custom modules are automatically loaded and made available to the email template. You can use the helper methods and classes defined in your custom modules directly in the email body.

Example custom email using a custom module:

<p>Good Morning,</p>

<p>Your network generated <%= format_currency(revenue_for_period(24)) %> in the last 24 hours.</p>

If you need to explicitly load a module (for example, to load it with a specific binding context), you can use:

<% CustomModule.load('module_name', binding) %>

Webhooks

Associated custom modules are automatically loaded before the webhook body is evaluated. The modules are evaluated in the webhook's binding context, making all defined methods and classes available.

Example webhook body using a custom module:

{

"daily_revenue": "<%= format_currency(revenue_for_period(24)) %>",

"weekly_revenue": "<%= format_currency(revenue_for_period(168)) %>"

}

Backend Scripts

Associated custom modules are automatically loaded before the backend script executes. The modules are evaluated in the script's execution context.

Example backend script using a custom module:

# Custom modules are already loaded at this point

puts "Revenue: #{format_currency(revenue_for_period(24))}"

Custom Portals and Operator Portals

Associated custom modules are loaded when the portal is rendered, making helper methods available to portal templates and embedded Ruby code.

Manual Loading

In some contexts, you may want to manually load a custom module. Use the CustomModule.load class method:

# Load a module by name

CustomModule.load('my_module')

# Load a module with a specific binding context

CustomModule.load('my_module', binding)

The second parameter allows you to specify the binding context where the module should be evaluated. This is useful when you need the module's code to have access to specific local variables or instance variables.
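To illustrate why the binding matters, the following sketch evaluates a block of source text inside a caller-supplied binding using Ruby's own `Binding#eval`. This is not the implementation of `CustomModule.load`; `load_source`, `module_source`, and `site_name` are hypothetical names used only to show that evaluated code can both define helper methods and read local variables of the binding it runs in.

```ruby
# Source text standing in for a custom module body (hypothetical example).
module_source = <<~'RUBY'
  def format_currency(amount)
    format('$%.2f', amount)
  end

  # Top-level module code can read locals of the binding it is evaluated in.
  "Report for #{site_name}"
RUBY

# Evaluate module source inside the caller-supplied binding and return
# the value of its last expression.
def load_source(source, target_binding)
  target_binding.eval(source)
end

site_name = 'arena'
banner = load_source(module_source, binding)

puts banner
puts format_currency(1234.5)
```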

Best Practices

- Keep modules focused: Each custom module should have a single, well-defined purpose

- Use descriptive names: Choose module names that clearly indicate their functionality

- Document your code: Use comments in the module body to explain complex logic

- Test thoroughly: Use the preview/trigger functionality to test modules before deploying

- Avoid global state: Custom modules should not rely on or modify global variables when possible

- Handle errors gracefully: Include error handling in your module code to prevent failures
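As one possible reading of the error-handling advice, a helper can fall back to a placeholder instead of raising when it receives unexpected input. The `safe_format_currency` name and the `fallback` parameter are hypothetical and are shown only as a sketch.

```ruby
# Format an amount as currency, falling back to a placeholder when the
# input is nil or not numeric instead of raising inside the template.
def safe_format_currency(amount, fallback: 'n/a')
  format('$%.2f', Float(amount))
rescue ArgumentError, TypeError
  fallback
end

puts safe_format_currency(19.5)
puts safe_format_currency('42')
puts safe_format_currency(nil)
puts safe_format_currency('abc')
```

A helper written this way keeps a single bad value from preventing the whole email or webhook body from rendering.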

Example Use Cases

Revenue Reporting Module:

# Name: revenue_helpers

def daily_revenue

ArTransaction.where(['created_at > ?', 24.hours.ago]).sum(:credit)

end

def format_currency(amount)

sprintf("$%.2f", amount)

end

User Statistics Module:

# Name: user_stats

def active_users_count

Account.where(['logged_in_at > ?', 7.days.ago]).count

end

def new_users_today

Account.where(['created_at > ?', 1.day.ago]).count

end

Network Health Module:

# Name: network_health

def uplink_status

# Custom logic to determine uplink health

PingTarget.where(name: 'Primary Uplink').first&.online? ? 'Online' : 'Offline'

end

Troubleshooting

Module not found error:

- Verify the module name is spelled correctly

- Ensure the module is associated with the record

- Check that the module exists in the custom modules scaffold

Undefined method error:

- Verify the method is defined in the associated custom module

- Check that the module is being loaded before the method is called

- Ensure there are no syntax errors in the module body

Syntax errors:

- Use the Ruby console to test module code before saving

- Check the module body for proper Ruby syntax

- Review error messages in health notices or logs
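One way to check a module body for syntax errors before saving it is to compile the source without running it. The sketch below assumes the standard (MRI) Ruby interpreter, where `RubyVM::InstructionSequence.compile` is available; the `syntax_error_in` helper is a hypothetical name.

```ruby
# Return nil when the source parses cleanly, or the error message otherwise.
# RubyVM::InstructionSequence is specific to the standard (MRI) interpreter.
def syntax_error_in(source)
  RubyVM::InstructionSequence.compile(source)
  nil
rescue SyntaxError => e
  e.message
end

good = "def format_currency(amount)\n  format('$%.2f', amount)\nend\n"
bad  = "def format_currency(amount)\n  format('$%.2f', amount)\n" # missing end

puts syntax_error_in(good).inspect
puts syntax_error_in(bad).nil? ? 'ok' : 'syntax error detected'
```

Compiling only checks that the code parses; it does not confirm that the methods behave as intended or that the records they query exist.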

Backend Scripts

The backend script allows the operator to write custom Ruby scripts for tasks such as interacting with mobile applications or performing a configuration change on a regular schedule. In the Notification Actions scaffold, choose a trigger for the script from the drop-down list. Examples include manual, periodic, errors, and thresholds.

Send SNMP Trap via BackEnd Script

As an example, when a change in the Ping Target scaffold is detected, a custom SNMP trap can be sent to a destination (SNMP trap collector) of choice, using a custom enterprise ID, relaying event time and specific action (interface up/down).

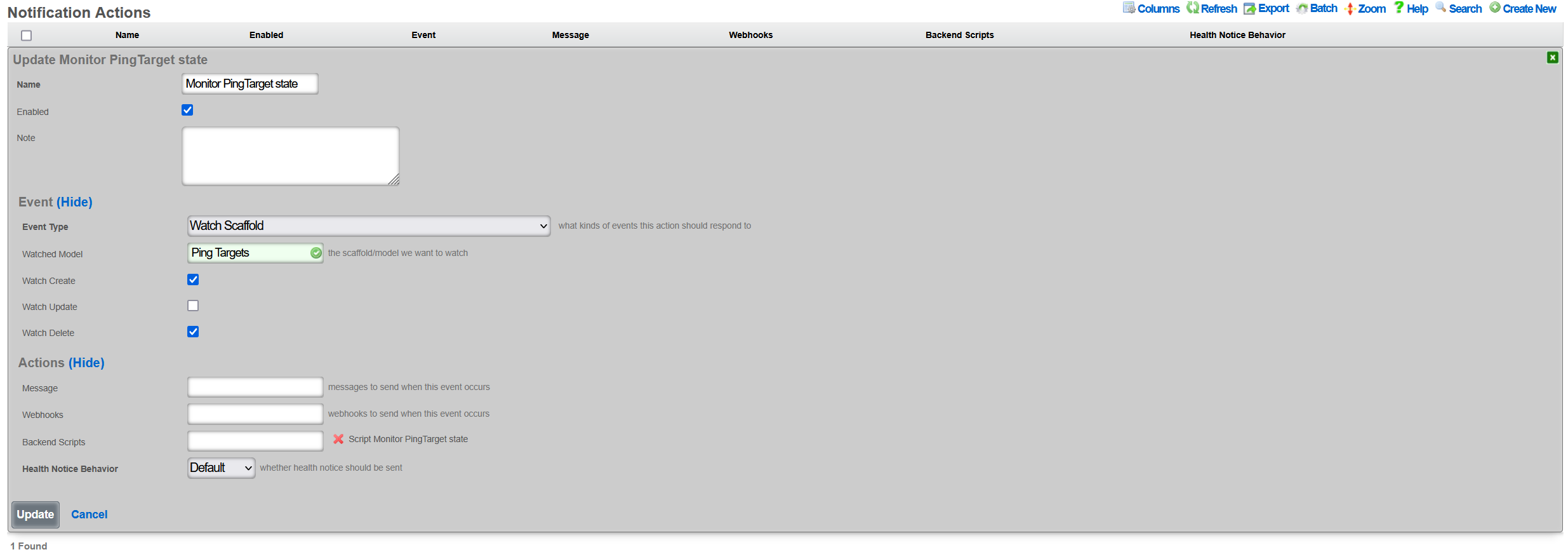

To create a new notification action, use the Services::Notifications::Notification Actions scaffold and create an entry with the following fields:

- Name contains an arbitrary name for this notification action.

- Enabled is checked, so that the action is active.

- Event Type is set to Watch Scaffold, which notifies the action about any change in the given scaffold.

- Watch Model indicates which system scaffold is watched for changes; in this case, the Ping Target scaffold is selected.

- Watch Create and Watch Delete are checked, so that both the up and down events are generated for every Ping Target.

All other fields are left in their default state and not modified.

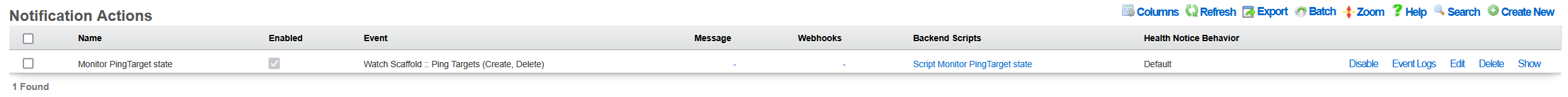

Once created, a new entry in the Services::Notifications::Notification Actions scaffold becomes visible, as shown below.

The given action can be disabled, if needed, without being permanently deleted. Also, it is possible to track the execution of the given action using the Event Logs action, which will display any execution errors for the given script, when such errors take place.

There are many event types that can be created, including periodic execution, manual execution, scaffold status changes, trigger on general error conditions, threshold events, and others. Available event configuration options depend on the given event type.

Once the notification action is created, the associated backend script needs to be created. To create a new backend script, use the Services::Notifications::Backend Scripts scaffold and create an entry with the following fields:

- Name is an arbitrary name for the backend script.

- Notification Actions indicates which of the available actions this script is tied to; in this case, the previously created PING Target SNMP trap is selected.

- Body contains the script to execute, which in this case sends an SNMPv1 trap to a specific target, indicating whether the given Ping Target is online or offline depending on the status of the given ping_target. The script is written in Ruby; no other scripting languages are supported at this time.

An example Ruby script that sends the SNMP trap is shown below.

require 'snmp'

# for T/S purposes only

puts "Script started at #{Time.current}"

var_trap_agent = "192.168.150.4" # target SNMP agent

var_trap_enterprise_id = 666 # per https://www.iana.org/assignments/enterprise-numbers/

SNMP::Manager.open(:Host => var_trap_agent, :Version => :SNMPv1) do |snmp|

snmp.trap_v1(

"enterprises.#{var_trap_enterprise_id}",

ping_target.target,

ping_target.online ? :linkUp : :linkDown, # send linkUp/Down event type depending on the status of the given PingTarget

42,

Time.current

)

end

# for T/S purposes only

puts "Script completed at #{Time.current}"

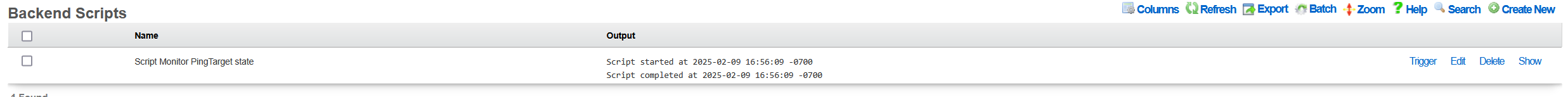

Once created, a new entry in the Services::Notifications::Backend Scripts scaffold becomes visible, as shown below.

Note that it is possible to test the given created backend script, using the Trigger action associated with the given script. However, when internal rXg objects are used in the script (ping_target in the example used in this KB), the Trigger action will result in execution errors due to the object missing in the scope. Internal rXg objects become available only when triggered through the associated Notification Action.
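A script can be made tolerant of manual testing by checking whether the record is in scope before using it. The sketch below assumes that a missing object shows up as an undefined name, which is how the behavior is described above. The `trap_event_for` helper and the `current_target` local are hypothetical.

```ruby
# Describe the event, or explain why nothing was sent when the script runs
# without a record in scope (for example via the Trigger action).
def trap_event_for(target)
  return :skipped if target.nil?

  target.online ? :linkUp : :linkDown
end

# defined? returns nil when ping_target is neither a local nor a method here.
current_target = defined?(ping_target) ? ping_target : nil
event = trap_event_for(current_target)
puts event == :skipped ? 'no ping_target in scope, nothing sent' : "would send #{event}"
```

Run on its own, this prints the skipped message; when a ping_target is supplied, the same helper returns the trap type to send.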

For reference, the structure of the ping_target object is as follows, showing a number of fields which can be used for internal scripting purposes. Individual objects can be previewed in the rXg shell using the Ruby console.

<PingTarget:0x000038a380ef50b8

id: 5,

name: "9.9.9.9",

target: "9.9.9.9",

timeout: 0.3e1,

attempts: 6,

online: true,

note: nil,

created_by: "adminuser",

updated_by: "rxgd(PingMonitor)",

created_at: Thu, 30 Jan 2025 09:24:53.456740000 PST -08:00,

updated_at: Thu, 30 Jan 2025 10:40:38.619050000 PST -08:00,

cluster_node_id: nil,

scratch: nil,

rtt_tolerance: nil,

jitter_tolerance: nil,

packet_loss_tolerance: nil,

wlan_id: nil,

interval: 0.3e1,

psk_override: nil,

wireguard_tunnel_id: nil,

traceroute_interval: nil,

fom_configurable: false>

To trigger the condition from the rXg shell without having to wait for the Ping Target timeout, execute the following command set in the repl (Perl) console.

my $pt = Rxg::ActiveRecord::PingTarget->last(); $pt->flipBooleanAttribute('online'); $pt->saveAndTrigger(); Rxg::ActiveRecord->commitDeferredSql()

Every time the command is executed, the state of the last Ping Target is flipped and an SNMP trap is generated, as shown in the capture from the SNMP trap collector below. The linkUp and linkDown events appear in the log in quick succession.

#00:17:46.091437 08:62:66:48:67:83 > bc:24:11:01:8f:af, ethertype IPv4 (0x0800), length 87: (tos 0x0, ttl 64, id 24299, offset 0, flags [none], proto UDP (17), length 73)

192.168.150.38.30776 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkDown[specific-trap(42)!=0] 1738369066 } }

#00:18:35.113910 08:62:66:48:67:83 > bc:24:11:01:8f:af, ethertype IPv4 (0x0800), length 87: (tos 0x0, ttl 64, id 37016, offset 0, flags [none], proto UDP (17), length 73)

192.168.150.38.48770 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkUp[specific-trap(42)!=0] 1738369115 } }

#00:18:47.357938 08:62:66:48:67:83 > bc:24:11:01:8f:af, ethertype IPv4 (0x0800), length 87: (tos 0x0, ttl 64, id 57315, offset 0, flags [none], proto UDP (17), length 73)

192.168.150.38.29247 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkDown[specific-trap(42)!=0] 1738369127 } }

#00:18:49.443970 08:62:66:48:67:83 > bc:24:11:01:8f:af, ethertype IPv4 (0x0800), length 87: (tos 0x0, ttl 64, id 24438, offset 0, flags [none], proto UDP (17), length 73)

192.168.150.38.33871 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkUp[specific-trap(42)!=0] 1738369129 } }

#00:18:53.628644 08:62:66:48:67:83 > bc:24:11:01:8f:af, ethertype IPv4 (0x0800), length 87: (tos 0x0, ttl 64, id 37059, offset 0, flags [none], proto UDP (17), length 73)

192.168.150.38.26210 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkDown[specific-trap(42)!=0] 1738369133 } }

The recorded packets indicate that the rXg (at 192.168.150.38 in this case) sends SNMP traps towards the collector at 192.168.150.4 with the appropriate structure, changing between the target up and down states, as expected.
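A capture like this one can also be checked programmatically rather than by eye. The following is a minimal Ruby sketch, not part of the rXg: the names `TRAP_PATTERN`, `parse_traps` and `alternating?` are hypothetical, and the regular expression assumes the tcpdump text format shown above. It extracts the sender, the collector and the trap type from each trap line, then confirms that the states alternate.

```ruby
# Hypothetical helper: extract SNMP trap events from tcpdump text output.
# Assumes the line format shown in the capture above.
TRAP_PATTERN = /
  (?<src>\d+\.\d+\.\d+\.\d+)\.\d+\s>\s   # sender IP followed by source port
  (?<dst>\d+\.\d+\.\d+\.\d+)\.162:       # collector IP on SNMP trap port 162
  .*?\b(?<state>linkUp|linkDown)\b       # generic trap type
/x

# Return one hash per trap line; lines that do not match are skipped.
def parse_traps(text)
  text.each_line.filter_map do |line|
    m = TRAP_PATTERN.match(line)
    next unless m

    { src: m[:src], dst: m[:dst], state: m[:state] }
  end
end

# True when no two consecutive traps report the same state.
def alternating?(events)
  events.each_cons(2).all? { |a, b| a[:state] != b[:state] }
end

# First three trap lines from the capture above.
capture = <<~'DUMP'
  192.168.150.38.30776 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkDown[specific-trap(42)!=0] 1738369066 } }
  192.168.150.38.48770 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkUp[specific-trap(42)!=0] 1738369115 } }
  192.168.150.38.29247 > 192.168.150.4.162: [udp sum ok] { SNMPv1 { Trap(30) .1.3.6.1.4.1.57404 9.9.9.9 linkDown[specific-trap(42)!=0] 1738369127 } }
DUMP

events = parse_traps(capture)
puts events.map { |e| e[:state] }.join(' -> ')
puts "alternating: #{alternating?(events)}"
```

Lines that do not contain a trap, such as the Ethernet header lines in the capture, are ignored by the parser.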

Big Red Button Mobile Application

This example shows how to set up a backend script that can be triggered by an API call from a mobile application, which is available in the Apple and Google Play app stores.

This example assumes that you have an existing WLAN created under the WLANs scaffold with a record name of arena.

Procedure:

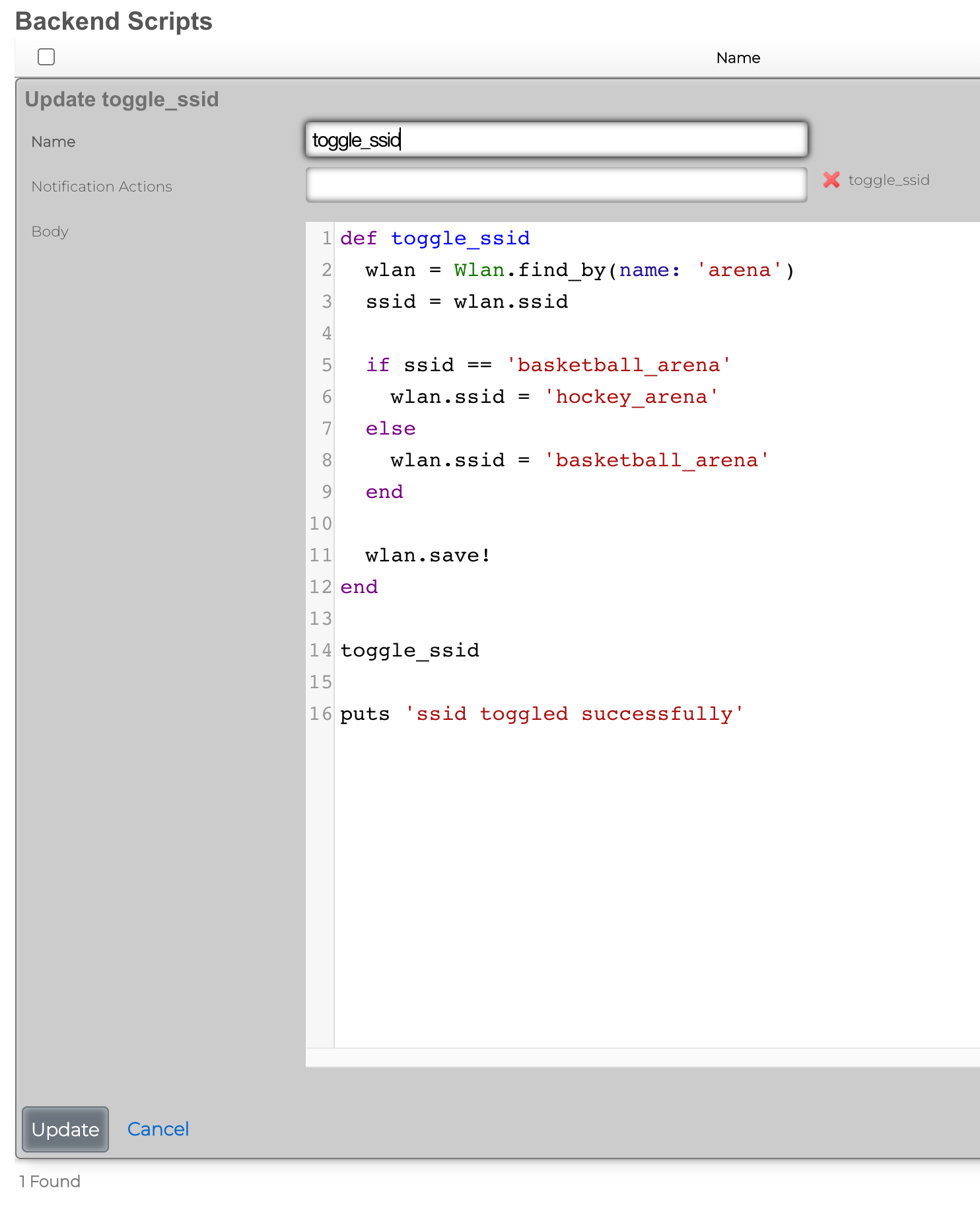

1) Browse to Services >> Notifications >> Backend Scripts
2) Click Create New
3) Name the backend script toggle_ssid.
4) Paste the following script into the body and click Create.

def toggle_ssid
  wlan = Wlan.find_by(name: 'arena')
  ssid = wlan.ssid

  if ssid == 'basketball_arena'
    wlan.ssid = 'hockey_arena'
  else
    wlan.ssid = 'basketball_arena'
  end

  wlan.save!
end

toggle_ssid

puts 'ssid toggled successfully'

5) Browse to Services >> Notifications >> Notification Actions
6) Click Create New
7) Name the notification action toggle_ssid.
8) Set the event type field to Manual.
9) Select toggle_ssid from the available scripts in the list.
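The toggle in the toggle_ssid backend script can be tried outside the rXg by separating the decision from the database write. The following is a minimal sketch under that assumption: `next_ssid` is a hypothetical helper that is not part of the rXg, and a plain Struct named `FakeWlan` stands in for the Wlan record.

```ruby
# Hypothetical helper: pick the SSID to switch to, given the current one.
# Mirrors the if/else branch in the toggle_ssid backend script.
def next_ssid(current)
  current == 'basketball_arena' ? 'hockey_arena' : 'basketball_arena'
end

# Stand-in for the Wlan record; the real script loads it with Wlan.find_by.
FakeWlan = Struct.new(:name, :ssid)

wlan = FakeWlan.new('arena', 'basketball_arena')

# Simulate three presses of the action button.
3.times do
  wlan.ssid = next_ssid(wlan.ssid)
  puts wlan.ssid
end
```

As in the original script, any SSID other than basketball_arena, including an unexpected value, is switched to basketball_arena.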

Install the rXg Action Button Mobile App

Apple: https://apps.apple.com/us/app/rxg-action-button/id1483547358

Android: https://play.google.com/store/apps/details?id=net.lok.aidan.rxg_action_button

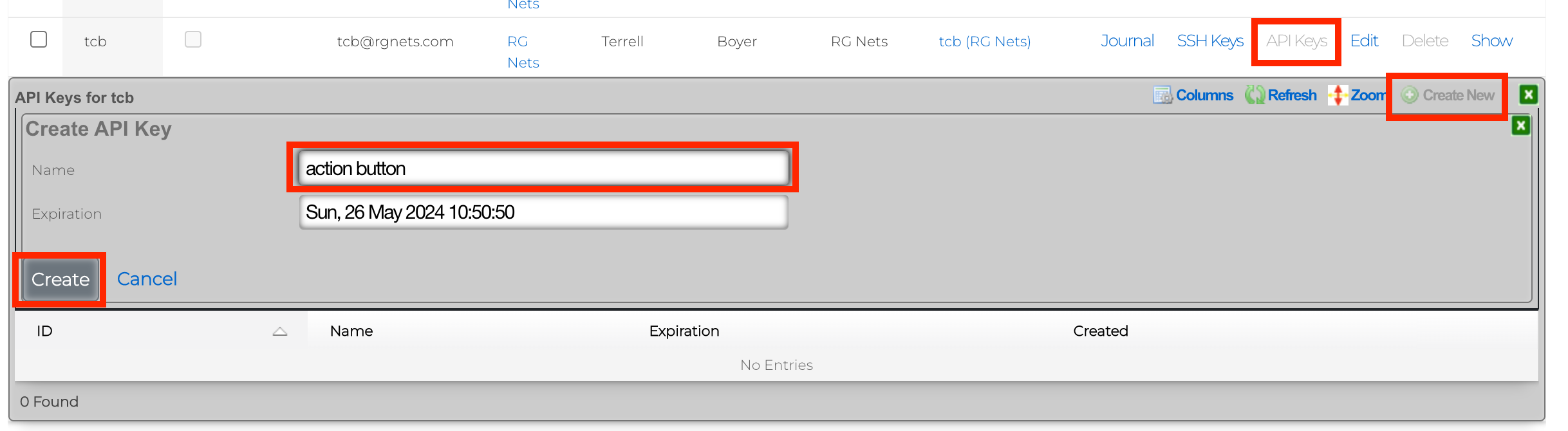

1) On the rXg, browse to System >> Admins
2) Next to your admin account, click on the API Keys link.
3) Click Create New
4) Provide the API Key a name and click Create.

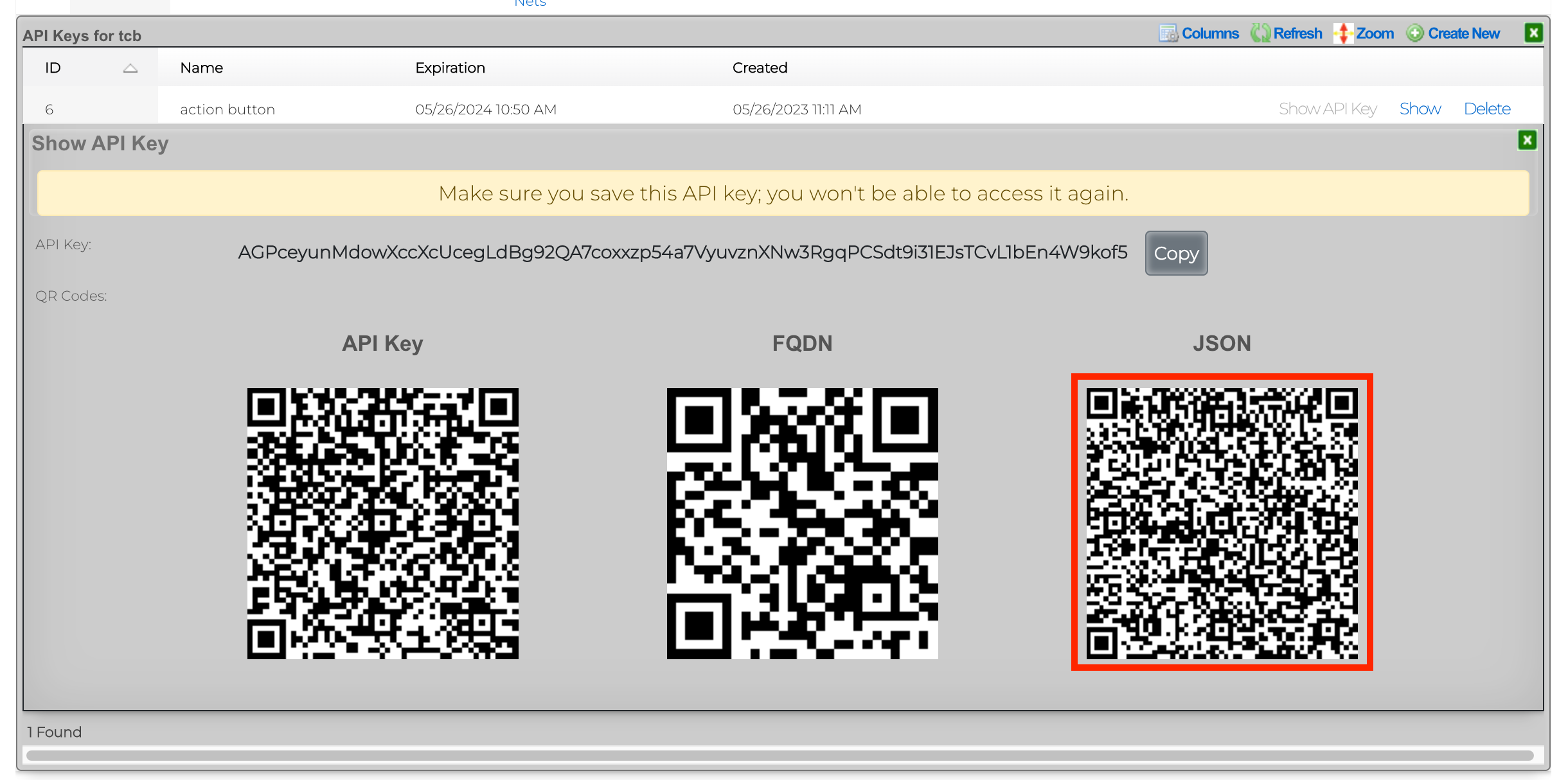

5) You will be presented with several QR codes. Make sure to leave this screen up.

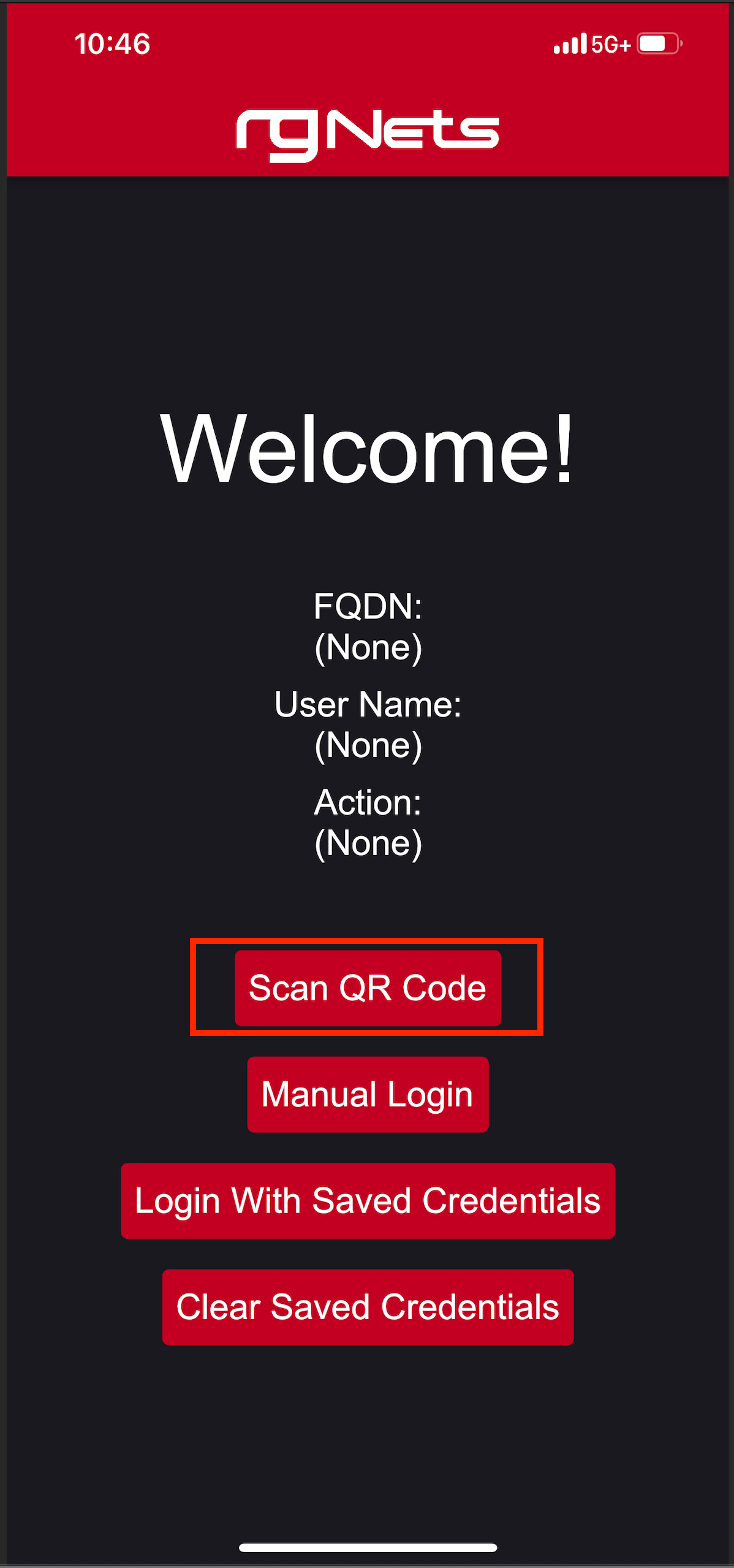

6) From the mobile app, click Scan QR Code.

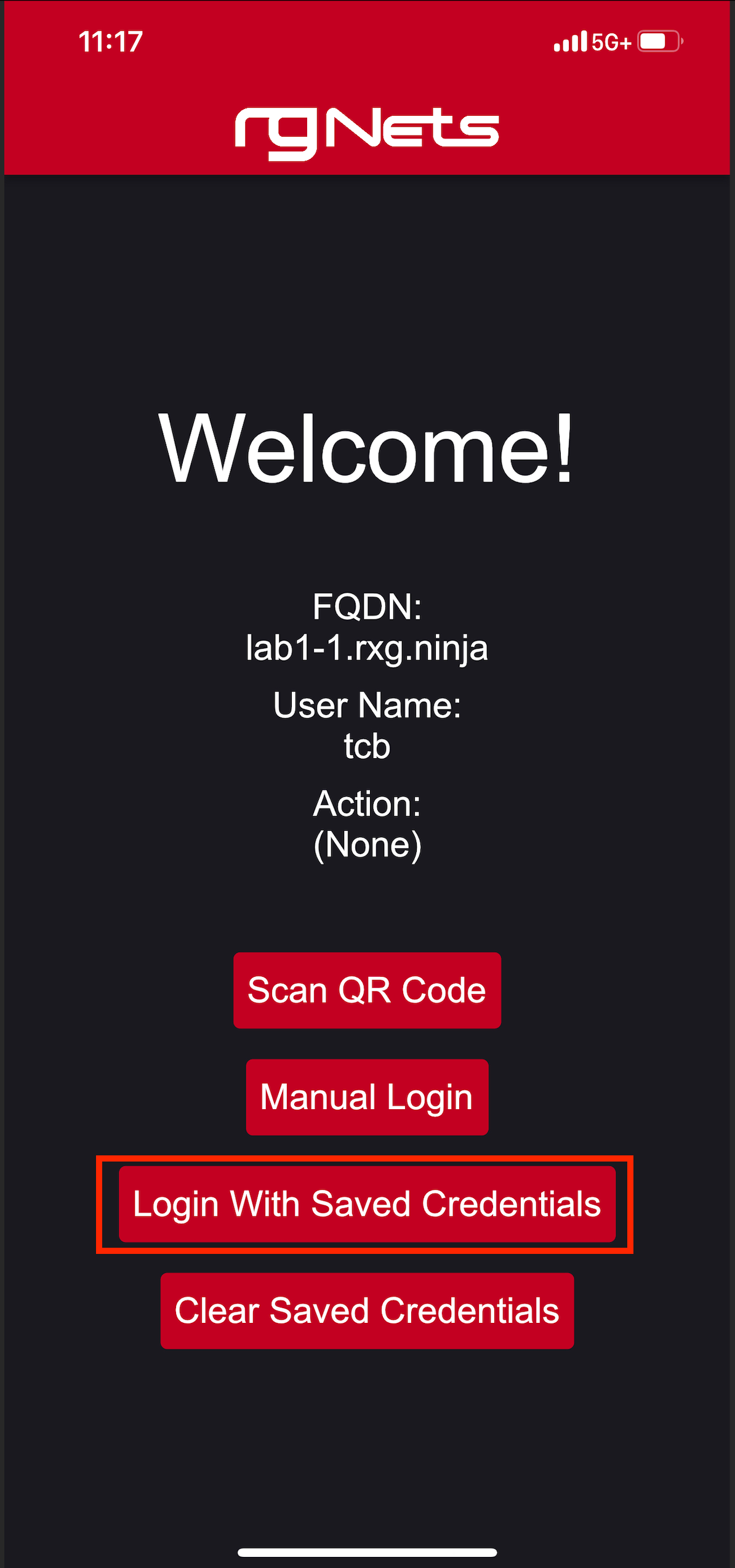

7) Scan the QR code labeled JSON.
8) Click Login With Saved Credentials
9) Set the action to toggle_ssid
10) Click on the slider to enable the button.

Now, simply press the red action button to toggle the SSID between basketball_arena and hockey_arena. Each change is pushed to the wireless controller via config sync.

This is a very simple example of what can be done using a combination of a mobile application and the rXg backend scripts.